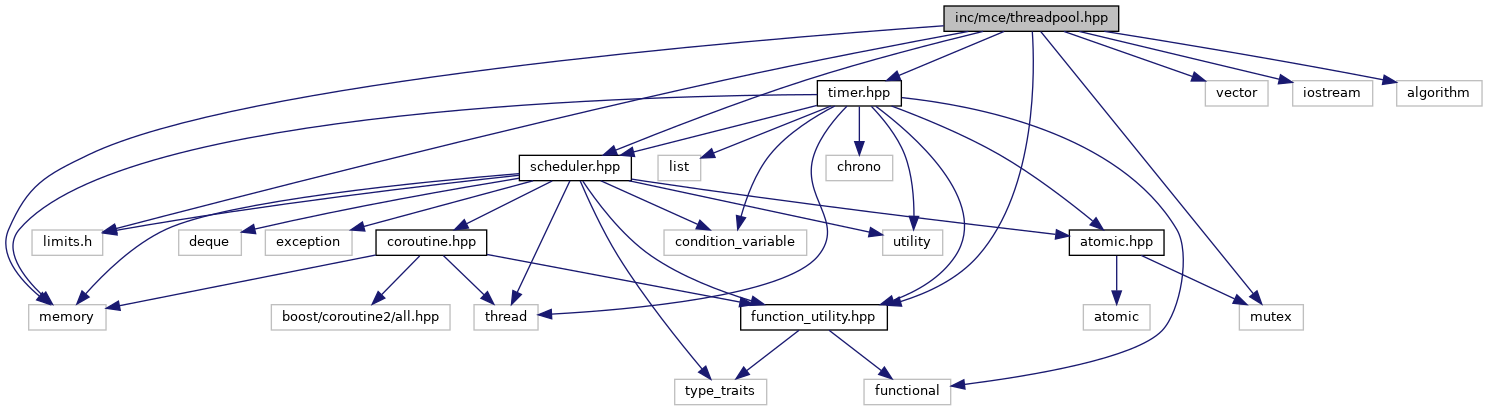

#include <limits.h>
#include <vector>
#include <mutex>
#include <iostream>
#include <algorithm>
#include <memory>
#include "function_utility.hpp"
#include "timer.hpp"
#include "scheduler.hpp"


Classes

| Kind | Name |
| --- | --- |
| struct | mce::threadpool |

Functions

| Return type | Function | Description |
| --- | --- | --- |
| mce::threadpool *& | mce::detail::tl_this_threadpool () | |
| bool | mce::in_threadpool () | Return true if the calling context is running in a threadpool. |
| threadpool & | mce::this_threadpool () | Return a reference to the threadpool the calling code is executing in. |
| bool | mce::default_threadpool_enabled () | Return true if default_threadpool() can be safely called, else false. |
| threadpool & | mce::default_threadpool () | Return the default threadpool. |
| double | mce::balance_ratio () | Return the balance ratio, set by the compiler define MCEBALANCERATIO. |
| scheduler & | mce::detail::default_threadpool_scheduler () | |
| scheduler & | mce::detail::concurrent_algorithm () | |
| scheduler & | mce::detail::parallel_algorithm () | |
| scheduler & | mce::detail::balance_algorithm () | |
| template<typename... As> void | mce::concurrent (As &&... args) | Launch user function and optional arguments as a coroutine running on a scheduler. |
| template<typename... As> void | mce::parallel (As &&... args) | Launch user function and optional arguments as a coroutine running on a scheduler. |
| template<typename... As> void | mce::balance (As &&... args) | Launch user function and optional arguments as a coroutine running on a scheduler. A best-effort algorithm for balancing CPU throughput and communication latency. |

Detailed Description

threadpool executor API

Function Documentation

◆ balance()

| void mce::balance | ( | As &&... | args | ) |

Launch user function and optional arguments as a coroutine running on a scheduler. A best-effort algorithm for balancing CPU throughput and communication latency.

This algorithm sacrifices initial scheduling launch time to calculate whether the workload of the current threadpool needs to be rebalanced; if it does not, the algorithm prefers scheduling on the calling thread's scheduler. That is, it is slower than either mce::concurrent() or mce::parallel() to initially schedule coroutines (it has no further effect on coroutines once they have been scheduled the first time). However, if initial scheduling of coroutines is not a bottleneck, this mechanism may be used to encourage balanced usage of computing resources without unnecessarily sacrificing communication latency gains.

This algorithm may be most useful in long-running programs, where neither CPU throughput nor communication latency is the highest demand but consistency is. In such a case, the user may consider replacing many or all usages of mce::concurrent() with mce::balance(), exchanging a minor scheduling cost for a long-term stability increase.

Template value RATIO_LIMIT is the maximum ratio, obtained by dividing the largest load by the smallest load, that is tolerated before rebalancing. For instance, with a RATIO_LIMIT of 2.0, the scheduler with the heaviest load must be twice as loaded as the lightest scheduler before rebalancing occurs.

The operating theory of this algorithm is that if rebalancing occurs only when it is truly necessary, then the newly scheduled coroutines will themselves schedule additional operations on their thread (instead of the thread of their parent coroutine), allowing the workload to balance naturally. Manual rebalancing will recur until the scheduled coroutines are naturally scheduling additional coroutines on their current threads in roughly equal amounts.
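The decision described above can be modeled in a few lines. This is a minimal sketch under stated assumptions, not the library's implementation: `choose_worker` is a hypothetical helper, each load is represented as a plain `double` standing in for whatever scheduler::measure() reports, reaching the limit exactly is assumed to be enough to rebalance, and an idle worker next to a busy one is assumed to count as unbalanced.

```cpp
#include <algorithm>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical model, not mce code: pick which worker receives a new coroutine.
// loads[i] stands in for the load reported by worker i's scheduler (assumption:
// non-negative, larger means busier). current is the caller's own worker index.
inline std::size_t choose_worker(const std::vector<double>& loads,
                                 std::size_t current,
                                 double ratio_limit) {
    if (loads.empty()) return current; // nothing to compare against

    // Locate the lightest and heaviest loads in a single pass.
    auto mm = std::minmax_element(loads.begin(), loads.end());
    const double least = *mm.first;
    const double greatest = *mm.second;
    const std::size_t least_index =
        static_cast<std::size_t>(std::distance(loads.begin(), mm.first));

    // Assumption: a zero load next to a non-zero load is treated as unbalanced,
    // which also avoids dividing by zero.
    const bool unbalanced = (least == 0.0)
        ? (greatest > 0.0)
        : (greatest / least >= ratio_limit);

    // Rebalance onto the lightest worker, otherwise stay on the caller's worker.
    return unbalanced ? least_index : current;
}
```

With loads of 4, 9 and 2 and a limit of 2.0, the ratio is 9 / 2 = 4.5, so the sketch picks the worker with load 2. With loads of 4, 5 and 3 the ratio is about 1.67, so the new coroutine stays on the caller's worker.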

Parameters

- f: a Callable
- as...: any Callable arguments

◆ balance_algorithm()

| scheduler & mce::detail::balance_algorithm | ( | ) | inline |

- scheduler with least workload
- result of scheduler::measure() with the least load
- result of scheduler::measure() for the worker with the greatest load

◆ concurrent()

| void mce::concurrent | ( | As &&... | args | ) |

Launch user function and optional arguments as a coroutine running on a scheduler.

Prefers to schedule coroutines on the thread local scheduler for fastest context switching (allows for fastest communication with other coroutines running on the current thread) with the default threadpool as a fallback.

This is the recommended way to launch a coroutine unless multicore processing is required. Even then, it is recommended that child coroutines be scheduled with concurrent() as communication speed is often the bottleneck in small asynchronous programs.

◆ parallel()

| void mce::parallel | ( | As &&... | args | ) |

Launch user function and optional arguments as a coroutine running on a scheduler.

Prefers to schedule coroutines on the current threadpool for maximally efficient CPU distribution (allows for greater CPU throughput at the cost of potentially slower communication between coroutines), but will fall back to the thread local scheduler or to the default threadpool.

If some coroutine consistently spawns many child coroutines, it may be useful to schedule them with mce::parallel() instead of mce::concurrent() to encourage balanced usage of CPU resources.
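To contrast the preference orders described for mce::concurrent() and mce::parallel(), the following sketch models them with stand-in types. Everything in it is hypothetical: `target`, `context`, `concurrent_target` and `parallel_target` are illustration names that do not exist in mce, and the order of the two fallbacks for parallel() is assumed to match the order in which the text lists them.

```cpp
// Hypothetical stand-ins for the places a coroutine could be scheduled.
enum class target {
    thread_local_scheduler,
    current_threadpool,
    default_threadpool
};

// Hypothetical description of what the calling context has available.
struct context {
    bool has_thread_scheduler; // caller is running on a scheduler
    bool in_threadpool;        // caller is running inside a threadpool
};

// Order described for mce::concurrent(): thread local scheduler first,
// default threadpool as the fallback.
inline target concurrent_target(const context& c) {
    return c.has_thread_scheduler ? target::thread_local_scheduler
                                  : target::default_threadpool;
}

// Order described for mce::parallel(): current threadpool first, then
// (assumed order) the thread local scheduler, then the default threadpool.
inline target parallel_target(const context& c) {
    if (c.in_threadpool) return target::current_threadpool;
    if (c.has_thread_scheduler) return target::thread_local_scheduler;
    return target::default_threadpool;
}
```

In this model, a caller running inside a threadpool worker gets different answers from the two functions: the concurrent order keeps the child on the caller's own scheduler, while the parallel order hands it to the surrounding threadpool.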