EB corbos Linux SDK

Overview

EB corbos Linux built on Ubuntu is a long-term maintained embedded Linux distribution focused on automotive ECUs. Elektrobit can provide security patches for a frozen package set for up to 15 years at comparatively low cost. To realize this, Elektrobit partners with Canonical. EB corbos Linux uses many Ubuntu packages, qualifies these packages for automotive embedded use cases in reference images, and adds embedded-optimized components to create an industry-grade embedded Linux build toolkit.

In contrast to general purpose Linux distributions, EB corbos Linux allows a user to create a completely customer specific image from scratch in a reproducible way. This is realized using this SDK. A free variant of EB corbos Linux is available at the Elektrobit homepage. To kick-start the development of new ECUs, a full EB corbos Linux release also contains pre-qualified reference images which already implement typical automotive use-cases. The free variant doesn’t contain proprietary hardware drivers or pre-qualified reference images. Please contact Elektrobit sales to get a full evaluation package of EB corbos Linux.

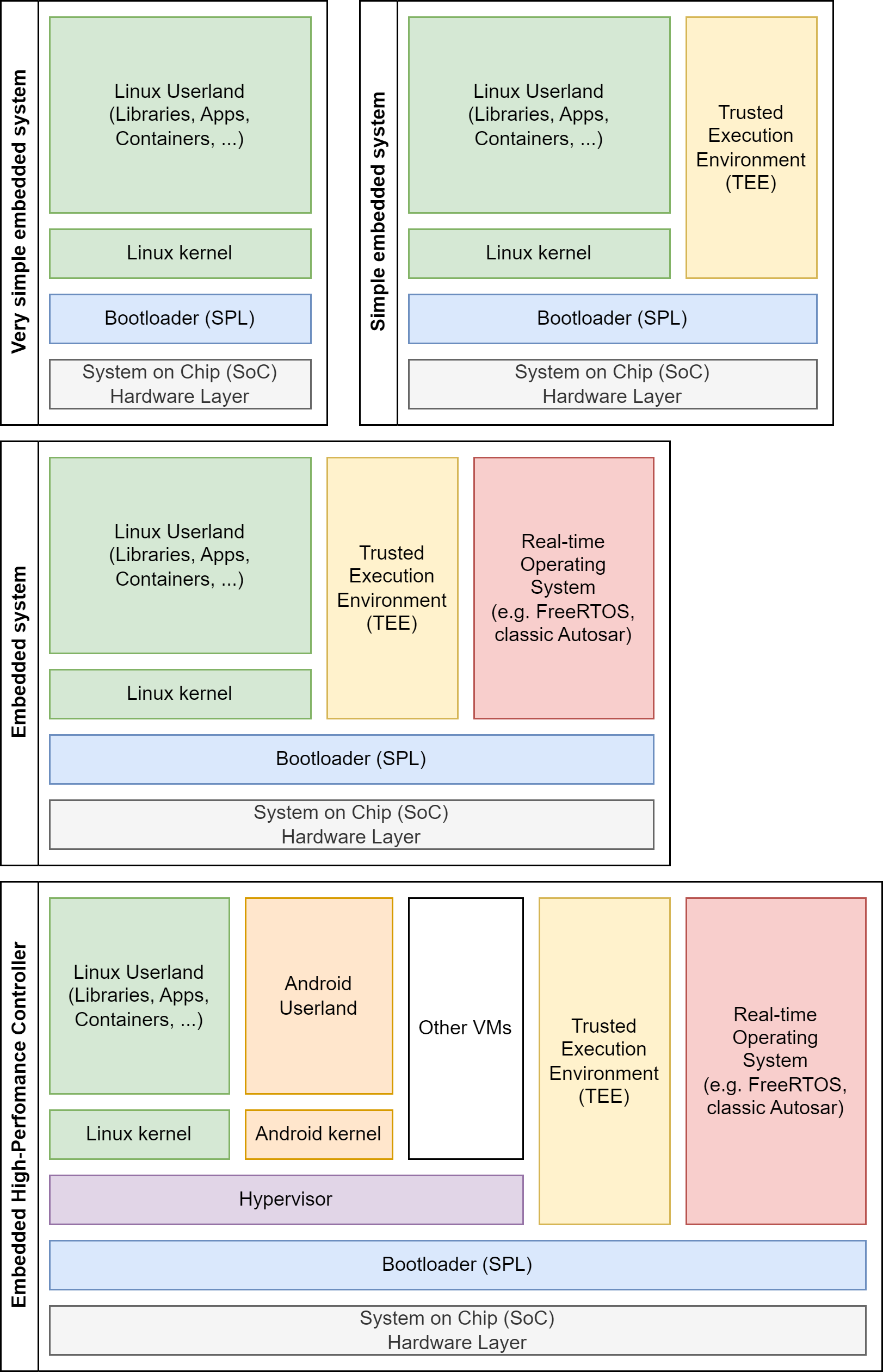

The image above shows a range of embedded system architectures.

Very simple embedded systems run only a bare-metal Linux. An example of such a system is the Raspberry Pi running Raspberry Pi OS. Such images can easily be generated directly with tools like elbe, kiwi-ng or debos, but this architecture doesn’t fit industrial needs.

For real world industrial solutions at least secure boot is required, and typically a Trusted Execution Environment (TEE) is involved in the secure boot process. This is depicted above as a simple embedded system. Such images may already require a more complex partition layout, depending on the bootloader and SoC requirements.

In the automotive world, a safety-certified real-time OS like Classic AUTOSAR is typically involved in addition to a POSIX OS. This is depicted above as an embedded system. If this is combined with an A/B scheme for updating Linux and Classic AUTOSAR, the storage layout gets quite complex and can hardly be created directly with the tools mentioned above.

Our day-to-day business at Elektrobit is automotive high-performance controllers (HPCs). HPCs extend the embedded system architecture with a hypervisor and multiple virtual machines (VMs), like an additional Android VM for infotainment solutions. The target of EB corbos Linux, and this SDK, is to fully support such embedded high-performance controller system architectures, and to allow development and maintenance of such systems in an easy, efficient and reliable way.

This repository provides a template workspace to start developing your own Linux images and applications. It’s based on a dev container to provide a consistent build environment. This dev container can also be used stand-alone with other IDEs or in CI environments. For more details about the container and stand-alone usage look at the dev container repository.

Setup

The EB corbos Linux template workspace is tested using Ubuntu 22.04 and Ubuntu 24.04 host environments on x86_64 machines. It is not compatible with other host CPU architectures, but arm64 host support is planned for a future release.

The build host needs to provide a Docker installation and a Python 3 installation, including the venv module (package python3-venv on Ubuntu). Docker needs support for running privileged containers.

The EB corbos Linux template workspace is based on a dev container, and is not using VMs for cross-building. This simplifies the setup and provides good build speed, but it requires support for executing non-native binaries if images for foreign architectures shall be built. To make this work, the host needs to support binfmt. On Ubuntu hosts, binfmt can be enabled by installing the packages binfmt-support and qemu-user-static. To allow mount operations which are required during image build, a privileged execution of the container is necessary, and the /dev folder needs to be bind-mounted into the container to allow access to newly created losetup devices. Running other workloads on the build host may cause issues, since binfmt and losetup configure the kernel and therefore modify the host environment for all running processes and containers.
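The prerequisites above can be checked before the first build. The following is a minimal sketch, not part of the SDK: the function name is hypothetical, and it assumes that a registered binfmt handler for arm64 shows up as `/proc/sys/fs/binfmt_misc/qemu-aarch64`, which is the name used by the Ubuntu qemu-user-static package.

```python
import os
import shutil


def missing_prerequisites(binfmt_dir="/proc/sys/fs/binfmt_misc",
                          handler="qemu-aarch64",
                          tools=("docker", "python3")):
    """Return a list of problems; an empty list means the host looks ready."""
    problems = []
    for tool in tools:
        # shutil.which returns None if the tool is not on PATH.
        if shutil.which(tool) is None:
            problems.append(f"{tool} not found on PATH")
    # Assumption: each registered binfmt handler appears as a file in binfmt_dir.
    if not os.path.exists(os.path.join(binfmt_dir, handler)):
        problems.append(f"binfmt handler {handler} is not registered")
    return problems


if __name__ == "__main__":
    for problem in missing_prerequisites():
        print("missing:", problem)
```

The check only covers what can be seen from the file system and PATH. It does not verify that Docker is allowed to run privileged containers.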

The following sections assume that you don’t have an Ubuntu 22.04 or 24.04 host OS and use the Remote SSH feature of Visual Studio Code to connect to a remote environment as build host. This will work if you can SSH into the build host and doesn’t require UI-support on the build host. On Windows, WSL2 should also work.

Optional: Prepare VirtualBox VM

If you don’t already have an Ubuntu development host, you can create a new one using VirtualBox, a free hypervisor available for many operating systems.

First download an Ubuntu ISO image. For preparing this section, I used an Ubuntu 24.04 server ISO, since a desktop UI is not needed. Then download and install VirtualBox, and create a new virtual machine with the following options:

- RAM: 8192 MB (less should also work)

- CPU: 3 cores (more is better, less will also work)

- Disk: 100 GB (more is better, less will also work)

- A second, host-only network interface.

Skipping automatic installation will allow you to change the hardware settings before the installation. If you add the second interface after installation, you must configure it manually.

Boot the VM with the Ubuntu ISO image and follow the installation wizard. I have chosen the minimal server variant.

After installation, log in to the VM and install openssh-server, docker and git: sudo apt install openssh-server docker.io git. Get the IP address of the VM by running the command ip addr. The address starting with 192.168. is the one of the host-only interface.

For me, the address was 192.168.56.106.
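If the output of ip addr is long, the host-only address can also be picked out with a small script. This is only a sketch: the function name is hypothetical, and the sample text is a shortened, made-up example of ip addr output that reuses the address mentioned above.

```python
import re


def host_only_candidates(ip_addr_output):
    """Return all IPv4 addresses starting with 192.168. found in `ip addr` output."""
    # Interface addresses are printed as "inet <address>/<prefix length>".
    return re.findall(r"inet (192\.168\.\d{1,3}\.\d{1,3})/", ip_addr_output)


# Shortened, illustrative sample; interface names differ between machines.
sample = """
2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
    inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic enp0s3
3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
    inet 192.168.56.106/24 brd 192.168.56.255 scope global dynamic enp0s8
"""

print(host_only_candidates(sample))
```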

Enabling nested virtualization for KVM support

The Linux KVM technology allows running virtual machines, for the same CPU architecture as the host, with almost native speed.

To make use of this in VirtualBox, you need to disable the Windows Hypervisor.

Please be aware that this may affect other virtualization tooling like Windows WSL.

To disable the Windows Hypervisor, open a PowerShell as Administrator, and run bcdedit /set hypervisorlaunchtype off. Afterwards, you need to reboot your Windows machine.

After the reboot, you can enable nested virtualization for your VirtualBox VM by editing the machine, choosing System > CPU and enabling the checkbox for nested VT-x/AMD-V.

Setup Visual Studio Code

Install Visual Studio Code on your local machine. It’s available for free for all major operating systems.

Run Visual Studio Code (VS Code) and open the extensions view (Ctrl + Shift + X). Now install the Remote SSH and the Dev Containers extensions.

If you will not use a remote development host, you can skip the next two sections and start with installing the required tools.

Prepare SSH connection

Let’s try to connect to the Ubuntu remote development host.

Open a new terminal in VS Code and type ssh <your user>@<IP of the host>. In my case it is: ssh ebcl@192.168.56.106. If it works, you are asked to accept the key, and then you can log in with your password.

This will give you a shell on the remote development host.

If you are on Windows and you get an error that ssh is not available, you can install Git for Windows. This will also give you an SSH client.

To avoid typing your password all the time, you can authenticate with a key.

To use key authentication, disconnect from the remote host by typing exit, and then run ssh-copy-id <your user>@<IP of the host> in the VS Code shell.

If you are on Windows and get the error that the command ssh-copy-id is not known, you can use type $env:USERPROFILE\.ssh\id_rsa.pub | ssh <your user>@<IP of the host> "cat >> .ssh/authorized_keys". If you don’t have an SSH authentication key, you can create one using the ssh-keygen command.

Connect using VS Code Remote SSH plugin

Now you are ready to use the Remote SSH. Open VS Code, then open the command palette (Ctrl + Shift + P) and choose Remote SSH: Connect to host. Select Add new host and enter <your user>@<IP of the host>. In my case, I entered ebcl@192.168.56.106. Then select Linux as the host OS.

VS Code will install the remote VS Code server on the remote host, and open a window connected to this server.

If it works, you should see SSH: <IP of the host> in the lower left corner.

Clicking this element will bring up the connection menu.

Install required tools and clone ebcl_template repository

If you start from a plain Ubuntu 22.04 installation, you can install the needed dependencies using the following command: sudo apt install docker.io binfmt-support qemu-user-static

WARNING:

When using a distribution that provides qemu in version 8.1.1 or later (like Ubuntu 24.04), building for arm64 targets is broken due to a bug in qemu. It will fail with a message like W: Failure trying to run: chroot "/tmp/tmpp7s0kahl" /sbin/ldconfig.

We recommend using Ubuntu 22.04 or, if that is not possible, downgrading qemu.

This can be done, for example, using:

    sudo apt remove qemu-user-static
    wget http://launchpadlibrarian.net/690251791/qemu-user-static_8.0.4+dfsg-1ubuntu3_amd64.deb
    sudo apt install ./qemu-user-static_8.0.4+dfsg-1ubuntu3_amd64.deb

The current version of qemu can be checked using qemu-aarch64-static --version.
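The version check can be scripted, for example as a guard in a CI job. The sketch below is an illustration under assumptions: the function names are hypothetical, and it assumes that the version output contains the text "version X.Y.Z" as it does for current qemu-user-static builds. The threshold 8.1.1 is taken from the warning above, which does not name a fixed version.

```python
import re

# First version reported as broken in the warning above.
FIRST_AFFECTED = (8, 1, 1)


def parse_qemu_version(version_output):
    """Extract (major, minor, patch) from text like 'qemu-aarch64 version 8.2.2 (...)'."""
    match = re.search(r"version (\d+)\.(\d+)(?:\.(\d+))?", version_output)
    if match is None:
        raise ValueError("no version number found")
    major, minor, patch = match.groups()
    # A missing patch level is treated as 0.
    return (int(major), int(minor), int(patch or 0))


def is_affected(version_output):
    """Return True if the reported version is 8.1.1 or later."""
    return parse_qemu_version(version_output) >= FIRST_AFFECTED
```

The text to pass in is the output of qemu-aarch64-static --version, for example captured with subprocess.run(..., capture_output=True, text=True).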

To use dev containers, your user (on the remote machine) needs to be able to create local Docker containers.

To give your user these rights, you need to add the user to the docker group with the command: sudo usermod -aG docker $USER. The changes become active after a new login.

Close the remote connection using the menu in the lower left corner of your VS Code window and reopen the connection using the command palette. If you are not using a remote machine, simply log out and log in again.

To use the SDK, we need git to clone the remote repository (or you download it otherwise), and we need Docker to run the dev container. All other required tools come as part of the container.

Open a shell on the remote machine again, change to your preferred storage location, and clone the ebcl_template repository by running: git clone https://github.com/Elektrobit/ebcl_template.git. This will give you a new folder ebcl_template.

In VS Code, open “File > Open Workspace from File…”, navigate to the ebcl_template folder and select ebcl_sdk.code-workspace. Now you can enter the dev container by opening the remote menu in the lower left corner and selecting “Reopen in Container”. This will automatically run a local build of the EB corbos Linux dev container. The first build, when the container is built completely from scratch, may take quite some time. On my machine it takes about 30 minutes. When you open the workspace a second time, it will be a matter of seconds.

Now you are ready to start development!

Using the EBcL SDK VS Code integration

To use VS Code for developing with the EBcL SDK, choose File > Open Workspace from File and navigate to the ebcl_template location. Select the ebcl_sdk.code-workspace file. This will open the folder bind-mounted in the docker dev container environment.

Now you can use the VS Code build tasks (Ctrl + Shift + B) to build the example images and build and package the example applications.

Using the EBcL SDK container stand-alone

If you don’t want to use VS Code, or you want to integrate the EBcL SDK in your CI workflows, you can use the dev container stand-alone. For more details on how to do this, take a look at the dev container repository.

Using EB corbos Linux SDK on arm64

The EB corbos Linux SDK is primarily developed and tested on amd64 Linux hosts, but it is also possible to use it on arm64 hosts. It has been tested successfully once, on a Raspberry Pi 5 with 8 GB of RAM.

Prepare the host

Please set up Visual Studio Code and Docker as described in Setup.

Prepare the dev container

The pre-built dev container is only available for amd64 hosts, so the container first needs to be built locally on arm64. First clone the EB corbos Linux dev container repository from https://github.com/Elektrobit/ebcl_dev_container. Then build the container for arm64 by running the builder/build_container script.

Prepare the workspace

Next you need the EB corbos Linux template workspace. Clone the template git repository from https://github.com/Elektrobit/ebcl_template. Then open Visual Studio Code and install the Dev Containers extension. Open the workspace file ebcl_sdk.code-workspace. Press the “Reopen in container” button of the popup, or open the command palette by pressing Ctrl + Shift + P and select the “Reopen in container” command.

Build an arm64 image

Now you can build arm64 images.

Open the folder containing the image description you want to build, e.g. /workspace/images/arm64/qemu/ebcl/crinit/debootstrap, in the integrated terminal in the dev container.

Then run make to build the image.

The build results are stored in a newly created build subfolder.

In case of QEMU images, the QEMU VM will be started automatically.
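For scripted builds inside the dev container, the same step can be wrapped in a few lines. This is a sketch with hypothetical helper names. It uses only the standard make option -C to select the image folder, and any target name passed in must be one that the Makefile of that image actually defines.

```python
import subprocess


def make_command(image_dir, target=None):
    """Build the argument list for running make inside an image description folder."""
    command = ["make", "-C", image_dir]
    if target is not None:
        # Target names depend on the Makefile of the image; none is assumed here.
        command.append(target)
    return command


def build_image(image_dir):
    """Run make in the given folder and fail loudly if the build fails."""
    # check=True raises CalledProcessError if make exits with a non-zero status.
    subprocess.run(make_command(image_dir), check=True)
```

Calling build_image("/workspace/images/arm64/qemu/ebcl/crinit/debootstrap") corresponds to running make in that folder. Keep in mind that for QEMU images the default behavior described above also starts the VM.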

Cross-building

Cross-building is supported when the host allows execution of binaries of the target architecture.

To allow executing binaries for different architectures, install binfmt support.

On Ubuntu, you can install it by running: sudo apt install binfmt-support qemu qemu-user-static.

Then you can build the image in the same way as the arm64 images.

Open the image folder in the terminal in the dev container, e.g. /workspace/images/amd64/qemu/ebcl/crinit/debootstrap.

Then run the image build by executing make in the folder.

Developing images

EB corbos Linux is intended as an embedded Linux distribution build kit, like Yocto. Instead of starting from a pre-defined and pre-configured already integrated image, the user can describe the image needed to solve the problem in an easy, clean and maintainable way, and the EB corbos Linux SDK will build exactly this image. In comparison to Yocto, where all packages are built from scratch, EB corbos Linux is using the packages from the Canonical Ubuntu distribution. This has the advantage that the same binaries are used which run on millions of servers in the cloud, and millions of single board computers. The effort to qualify and security-maintain these packages is shared with all these users. To keep all these advantages, it is mandatory to use the pre-built binaries, and accept the limitations caused by this.

We also know from our experience with automotive ECUs that embedded solutions often have very special needs, and that it may not be possible to stick with the defaults set by Canonical Ubuntu in all cases. For such edge cases, the EBcL SDK provides everything needed to modify a package and use the modified variant instead of the default package. If this way is chosen, large parts of the benefits of the Canonical packages are lost, and solution-specific maintenance and qualification are needed, causing effort over the whole lifetime of the embedded solution.

Customers of EB corbos Linux can order such adaptations, including the qualification and maintenance of the customer-specific package, as an add-on to the EB corbos Linux base offer. Using the defaults where possible, and adapting where really needed, delivers the needed flexibility for complex embedded solutions, while minimizing the development, qualification and maintenance efforts.

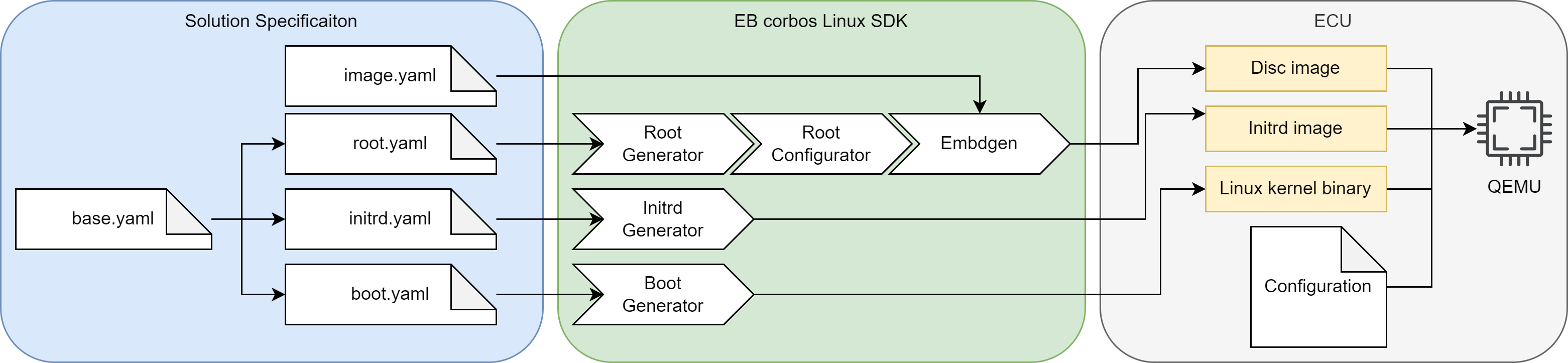

Image concept

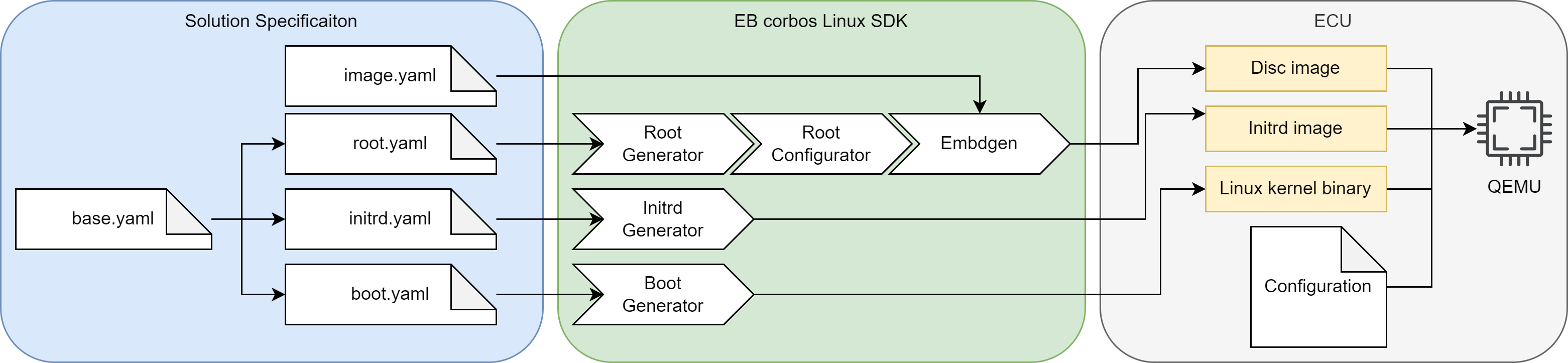

EB corbos Linux is designed to build embedded high-performance controllers. Such systems typically use quite powerful and complex arm64 SoCs, and involve hypervisors, real-time operating systems, trusted execution environments, and a non-trivial boot process involving secure boot. The requirements of the SoC and the bootloaders on the eMMC storage layout are often complex and differ considerably between SoCs. To tackle this challenge, EB corbos Linux considers all the different boxes in the diagram above as separate build artifacts, which can be binary integrated into an overall image as the last build step. These build steps are organized using make, and the EB corbos Linux SDK provides small helper tools to create these artifacts. The integration, if needed, is done using embdgen, an Elektrobit-launched open-source tool, which is able to create binary images in various formats from different binary artifacts.

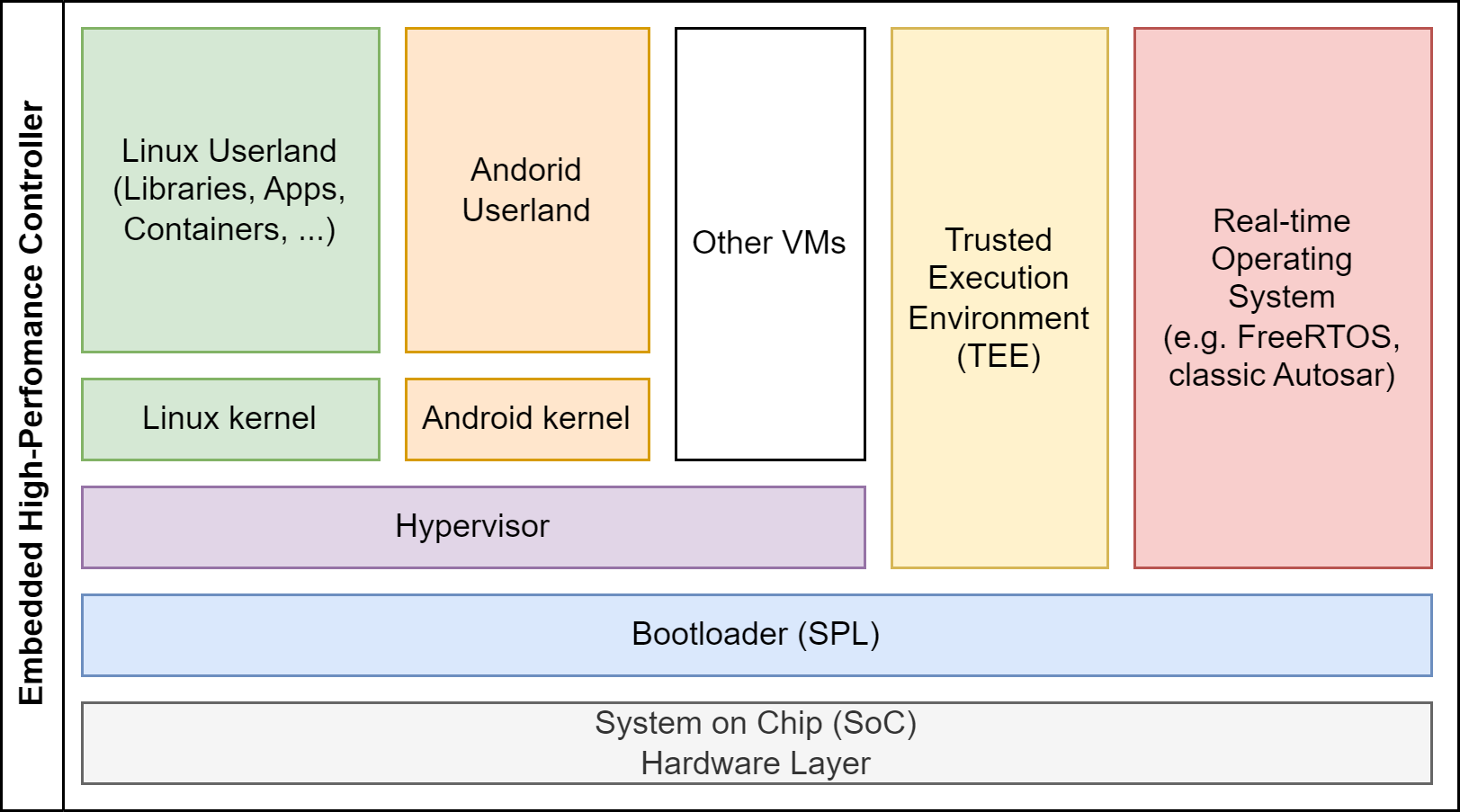

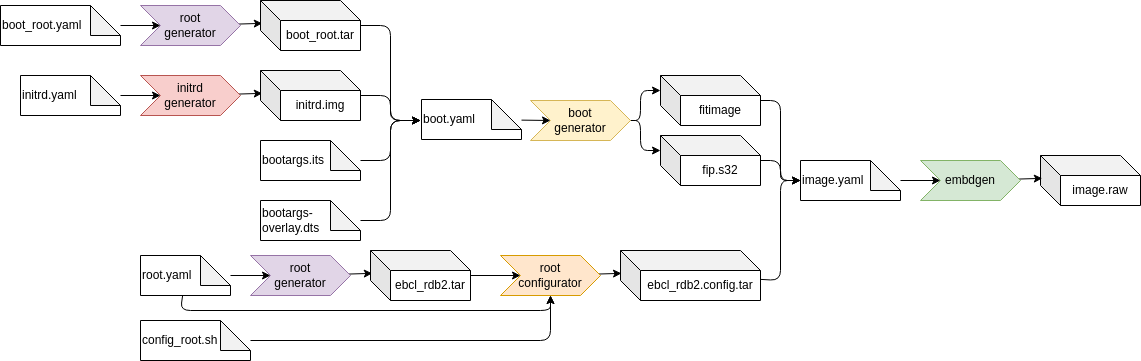

Let’s take a closer look at this build for the very simple QEMU build target. Typically QEMU gets a disk image, a Linux kernel binary and optionally an initrd.img, together with some configuration parameters.

From a run-time point of view, there are dependencies between these three artifacts caused by the used kernel version. The used kernel modules need to fit to the used kernel, and the C library used in the root filesystem must fit to the used kernel interface. From a build-time point of view, and also for our QEMU target point of view, these are three different artifacts. This has an important impact on the development process and workflow. If the initrd behavior shall change, only the initrd image needs to be rebuilt.
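To make the three artifacts concrete, the sketch below assembles a QEMU command line from them. It is an illustration, not the command used by the SDK: the function name, the file names in the usage example, the machine type, CPU model and memory size are assumptions. The options themselves (-kernel, -initrd, -append, -drive) are regular QEMU options.

```python
def qemu_command(kernel, initrd, disk_image, root_device="/dev/vda1"):
    """Assemble a qemu-system-aarch64 invocation from the three build artifacts."""
    return [
        "qemu-system-aarch64",
        "-machine", "virt",        # generic virtual arm64 board (assumption)
        "-cpu", "cortex-a72",      # CPU model (assumption)
        "-m", "1G",                # memory size (assumption)
        "-nographic",              # use the terminal as serial console
        "-kernel", kernel,         # artifact 1: kernel binary
        "-initrd", initrd,         # artifact 2: initrd.img
        "-append", f"console=ttyAMA0,115200 root={root_device}",
        # artifact 3: disk image, attached as a virtio block device
        "-drive", f"format=raw,file={disk_image},if=virtio",
    ]


# Hypothetical file names in a build folder.
command = qemu_command("build/vmlinuz", "build/initrd.img", "build/image.raw")
print(" ".join(command))
```

Because each artifact is passed through its own option, replacing one of them, for example a rebuilt initrd.img, does not require touching the other two.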

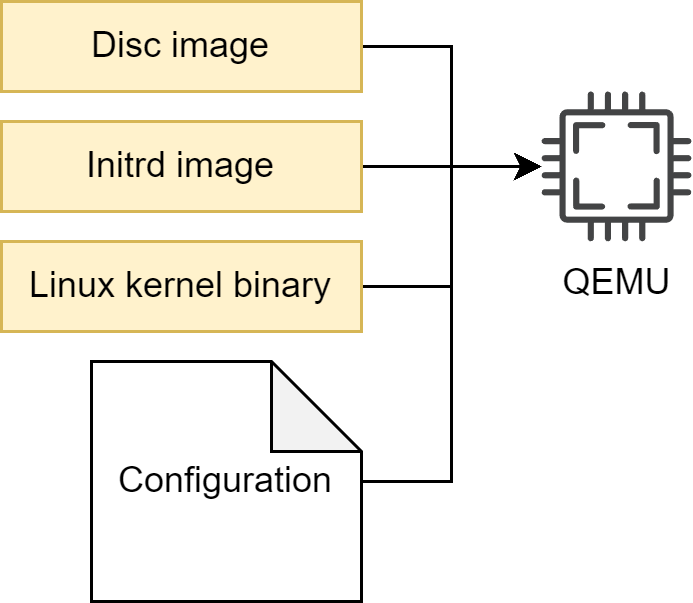

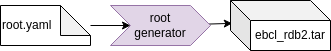

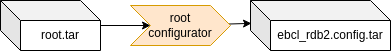

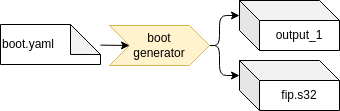

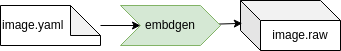

EB corbos Linux makes use of a set of small helper tools to support a flexible build flow and optimized build speed and development experience. These tools read YAML configuration files to specify the generated artifacts. To avoid redundant configuration, these configuration files support hierarchical includes. For the QEMU example the full build flow is:

- The image.yaml defines the storage layout, and is used as input for the embdgen integration step.

- The base.yaml contains the common configuration, like the used apt repositories, and is included by the specifications of the different artifacts.

- The root.yaml describes the root filesystem of the Linux VM. This filesystem is generated using debootstrap, installing additional required packages, and finally applying solution-specific configuration, given as overlay files or scripts. The debootstrap and package installation step is handled by the root generator. This quite time-consuming step only needs to be repeated when the package selection is changed. The root configurator applies the solution-specific configuration. The output of these two steps is a tarball of the root filesystem content. Embdgen is used to convert this tarball into a disk image.

- The initrd.yaml specifies the content of the initrd.img. For QEMU, we need to load the virtio block driver to be able to mount the root filesystem.

- The boot.yaml specifies the kernel which shall be used, and the boot generator is used to download the right Debian packages and extract the kernel binary.

The chaining of these tools is done using a good old Makefile.
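The relation between configuration files and build steps described above can be written down as a small dependency table. The sketch below is only a model of that description, with hypothetical names. In the SDK itself, make does this job, and it works at file granularity, so it cannot tell whether a change in root.yaml affects the package selection or only the scripts.

```python
# Which build step reads which configuration file (taken from the description above).
STEP_INPUTS = {
    "boot generator": {"base.yaml", "boot.yaml"},
    "initrd generator": {"base.yaml", "initrd.yaml"},
    "root generator": {"base.yaml", "root.yaml"},
    "root configurator": {"base.yaml", "root.yaml"},
    "embdgen": {"image.yaml"},
}

# Steps that consume the output of another step.
STEP_DEPENDS_ON = {
    "root configurator": {"root generator"},
    "embdgen": {"root configurator"},
}


def steps_to_rerun(changed_file):
    """Return the sorted names of all steps affected by a change to one file."""
    dirty = {step for step, inputs in STEP_INPUTS.items() if changed_file in inputs}
    # Propagate along the dependency chain until nothing new is added.
    changed = True
    while changed:
        changed = False
        for step, dependencies in STEP_DEPENDS_ON.items():
            if step not in dirty and dependencies & dirty:
                dirty.add(step)
                changed = True
    return sorted(dirty)


print(steps_to_rerun("initrd.yaml"))
print(steps_to_rerun("root.yaml"))
```

In this model, a change to initrd.yaml touches only the initrd generator, while a change to base.yaml touches every step.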

Image specification

Let’s take a look at this QEMU build flow example in detail and see how the details of this solution are specified and the roles of the different build helper tools.

Let’s look at it from left to right. The base.yaml specifies the common aspects of all the generated artifacts. It configures the kernel package, the used apt repositories and the target CPU architecture.

    # Kernel package to use
    kernel: linux-generic
    # Apt repositories to use
    apt_repos:
      - apt_repo: http://ports.ubuntu.com/ubuntu-ports
        distro: jammy
        components:
          - main
          - universe
      - apt_repo: http://ports.ubuntu.com/ubuntu-ports
        distro: jammy-security
        components:
          - main
          - universe
    # CPU architecture
    arch: arm64

The boot.yaml builds on top of the base.yaml. It specifies to download the dependencies of the used kernel package, which is necessary if a meta-package is used, and it specifies that the config* and vmlinuz* files from the boot folder shall be used as results. The tar flag specifies that the results shall not be bundled as a tarball, but instead directly copied to the output folder.

    # Derive values from base.yaml - relative path
    base: base.yaml
    # Download dependencies of the kernel package - necessary if meta-package is specified
    download_deps: true
    # Files to copy from the packages
    files:
      - boot/vmlinuz*
      - boot/config*
    # Do not pack the files as tar - we need to provide the kernel binary to QEMU
    tar: false

The boot generator reads this configuration, and the base.yaml, downloads and extracts the package linux-generic and its dependencies to a temporary folder, and copies the kernel binary and kernel configuration to the given output folder. In general, the boot generator is the tool to automate the build steps of the boot artifacts, like kernel collection and generation of SoC specific binary artifacts.
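The way boot.yaml builds on base.yaml can be pictured as a dictionary merge in which the including file wins. The sketch below works on already parsed content and uses a hypothetical function name. It is an assumption about the principle, not the implementation of the build tools, and in particular it replaces lists wholesale, which may differ from how the tools combine list values.

```python
def resolve(name, files):
    """Return the effective settings for `name`, following its chain of `base` entries.

    `files` maps a file name to its parsed content (a dict).
    """
    config = dict(files[name])
    parent = config.pop("base", None)
    if parent is None:
        return config
    merged = resolve(parent, files)
    merged.update(config)  # values in the current file override the parent
    return merged


# Parsed content of the two files shown above, reduced to a few keys.
files = {
    "base.yaml": {"kernel": "linux-generic", "arch": "arm64"},
    "boot.yaml": {"base": "base.yaml", "download_deps": True, "tar": False},
}

print(resolve("boot.yaml", files))
```

The boot generator therefore sees the kernel package and architecture from base.yaml together with the settings that boot.yaml adds.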

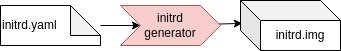

Let’s now take a look at the initrd.img generation. The initrd images created by the tooling from the server and desktop world are very flexible and complete from a feature point of view, but completely bloated from an embedded point of view. Since we know our target hardware and software in detail, we don’t need flexibility, but typically we want to have the best startup performance we can squeeze out of the used hardware. The initrd generator is a small helper tool to build a minimal initrd.img, to get the best possible startup performance. It also helps to customize the initrd content quickly and easily, e.g. for implementing a secure boot solution.

    # Derive values from base.yaml - relative path
    base: base.yaml
    # Root device to mount
    root_device: /dev/vda1
    # List of kernel modules
    modules:
      - kernel/drivers/block/virtio_blk.ko # virtio_blk is needed for QEMU

The initrd specification also derives the values from the base.yaml, and specifies that /dev/vda1 shall be used as the device for the root filesystem. Since the Canonical default kernel has no built-in support for virtio block devices, we have to load this driver in the initrd.img to be able to mount the root filesystem. This is done by specifying the kernel module in the modules list. Because of this line, the initrd generator downloads and extracts the specified kernel package and its dependencies, detects the kernel version, gets the right module, adds it to the initrd.img, and loads it before mounting the root filesystem. How this works in detail will be described in the later chapters.
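As a rough picture of the module lookup, the following sketch maps an entry of the modules list to a file in an extracted kernel package. It assumes the usual Linux layout lib/modules/<kernel version>/ and uses hypothetical function names. The actual detection logic of the initrd generator may differ.

```python
import os
import tempfile


def detect_kernel_version(extracted_root):
    """Return the single version directory found below lib/modules."""
    versions = sorted(os.listdir(os.path.join(extracted_root, "lib", "modules")))
    if len(versions) != 1:
        raise RuntimeError(f"expected exactly one kernel version, found {versions}")
    return versions[0]


def module_source(extracted_root, module):
    """Map an entry of the `modules` list to its file in the extracted package tree."""
    version = detect_kernel_version(extracted_root)
    return os.path.join(extracted_root, "lib", "modules", version, module)


if __name__ == "__main__":
    # Demonstration with a throw-away directory and a made-up version string.
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, "lib", "modules", "1.2.3-example"))
        print(module_source(root, "kernel/drivers/block/virtio_blk.ko"))
```

Raising an error when more than one version directory is present is a design choice of this sketch. In that situation the kernel_version parameter would be the natural way to pick one explicitly.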

    # Derive the base configuration
    base: base.yaml
    # Reset the kernel - should not be installed
    kernel: null
    # Name of the archive.
    name: ubuntu
    # Packages to install in the root tarball
    packages:
      - systemd
      - udev # udev will create the device node for ttyS0
      - util-linux
    # Scripts to configure the root tarball
    scripts:
      - name: config_root.sh # Name of the script, relative path to this file
        env: chroot # Type of execution environment

The last missing artifact is our root filesystem. The root.yaml describes the used root filesystem. It doesn’t need to contain a kernel, since the kernel is provided separately to QEMU. For Debian-based distributions, a minimal set of required packages is specified by the used base distribution, in our case Ubuntu Jammy. These packages are installed automatically, and we only need to specify what we want to have on top. In this case, it is systemd as init manager, udev to create the device nodes, and util-linux to provide the basic CLI tools. In addition, a config script is specified which adapts the configuration to our needs. This script is executed in a chroot environment. The name is used as the name for the resulting tarball of the root filesystem.

The build flow is using the root generator and the root configurator to separate the installation and configuration steps. The installation step takes much longer than the configuration step, and it only needs to be repeated when the package selection was adapted. This separation allows a fast iterative configuration of the root filesystem.
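The reasoning behind this split can be illustrated with a fingerprint over only those settings that influence the installation step. This is a conceptual sketch with hypothetical names. The SDK chains its tools with make and does not necessarily work this way, and the set of keys treated as installation inputs is an assumption.

```python
import hashlib
import json

# Settings assumed to influence the installation step.
INSTALL_KEYS = ("arch", "apt_repos", "packages")


def install_fingerprint(config):
    """Hash only the settings that require a new installation step when they change."""
    relevant = {key: config.get(key) for key in INSTALL_KEYS}
    payload = json.dumps(relevant, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


before = {"arch": "arm64", "packages": ["systemd", "udev"],
          "scripts": [{"name": "config_root.sh", "env": "chroot"}]}
# Only the configuration script changed: the fingerprint stays the same.
script_changed = dict(before, scripts=[{"name": "other.sh", "env": "chroot"}])
# The package selection changed: the fingerprint differs.
package_added = dict(before, packages=["systemd", "udev", "util-linux"])

print(install_fingerprint(before) == install_fingerprint(script_changed))
print(install_fingerprint(before) == install_fingerprint(package_added))
```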

The last step is to convert the configured root tarball into a disk image. The storage layout is specified in the image.yaml, and is picked up by embdgen. For the QEMU image we use a simple image based on a GPT partition table with only one partition. This partition uses the ext4 filesystem, has a size of 2 GB, and is filled with the contents of our root tarball.

    # Partition layout of the image
    # For more details see https://elektrobit.github.io/embdgen/index.html
    image:
      type: gpt
      boot_partition: root
      parts:
        - name: root
          type: partition
          fstype: ext4
          size: 2 GB
          content:
            type: ext4
            content:
              type: archive
              archive: build/ubuntu.config.tar

Altogether, we have a complete specification of our embedded solution, targeting QEMU as our virtual hardware.
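The image.yaml references the configured root tarball by path, so a mismatch between the two only shows up at integration time. A small helper can list the referenced archives up front. The sketch below uses a hypothetical function name and works on the parsed content of the image description shown above, written out as a Python dictionary.

```python
def archive_paths(node):
    """Collect every `archive` value found anywhere in a parsed image description."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "archive" and isinstance(value, str):
                found.append(value)
            else:
                found.extend(archive_paths(value))
    elif isinstance(node, list):
        for item in node:
            found.extend(archive_paths(item))
    return found


# Parsed form of the image.yaml shown above.
image = {"image": {"type": "gpt", "boot_partition": "root", "parts": [
    {"name": "root", "type": "partition", "fstype": "ext4", "size": "2 GB",
     "content": {"type": "ext4",
                 "content": {"type": "archive",
                             "archive": "build/ubuntu.config.tar"}}},
]}}

print(archive_paths(image))
```

Each returned path can then be checked with os.path.exists before embdgen is started.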

Configuration parameters

The following list gives an overview of the supported configuration parameters for the EB corbos Linux build helper tools. The round brackets note the configuration files to which each option applies. Embdgen is developed separately, and the details and options of the storage specification are documented in the embdgen documentation.

- base (boot/initrd/root/config) [default: None]: Parent configuration file. If specified, the values from the parent file will be used if not otherwise specified in the current file.

- arch (boot/initrd/root) [default: arm64]: The CPU architecture of the target hardware. The supported values are arm64, amd64 and armhf.

- use_fakeroot (boot/initrd/root/config) [default: False]: Use fakeroot in the generator tools where possible, instead of sudo and chroot. This may cause issues for edge-cases.

- apt_repos (boot/initrd/root) [default: None]: A list of apt repositories to download the required Debian packages. Example:

      apt_repos:
        - apt_repo: http://archive.ubuntu.com/ubuntu
          distro: jammy
          components:
            - main
            - universe
        - apt_repo: http://archive.ubuntu.com/ubuntu
          distro: jammy-security
          components:
            - main
            - universe

  In addition, an armored public key file or URL can be given as “key”, and an unarmored gpg file can be given as “gpg”, to authenticate the package sources.

- use_ebcl_apt (boot/initrd/root) [default: No]: If yes, the public apt repository of EB corbos Linux will be added. By default, the latest release will be used if the ebcl_version parameter is not given. This is a convenience feature, but be aware that this public apt repository doesn’t provide customer specific or proprietary packages.

- ebcl_version (boot/initrd/root) [default: latest release]: EB corbos Linux release version, for the automatically generated apt repository.

- host_files (boot/initrd/root) [default: None]: Files to include from the host or container environment. Example:

      host_files:
        - source: bootargs-overlay.dts
          destination: boot
        - source: bootargs.its
          destination: boot

  The destination is the path in the target root filesystem or chroot environment. In addition, the parameters “mode”, to specify the mode of the file, “uid”, to specify the owner of the file, and “gid”, to specify the owning group of the file, can be used.

- files (boot) [default: None]: Files to get as result from the chroot environment. Example:

      files:
        - boot/vmlinuz*
        - boot/config*

  These files can be part of an extracted Debian package, or result of a script executed in the chroot environment.

- scripts (boot/initrd/root/config) [default: None]: The scripts which shall be executed. Example:

      scripts:
        - name: config_root.sh
          env: chroot

  The supported environments are “chroot”, to run the script in a chroot environment, “fake”, to run the script in a fakeroot environment, “sudo” to run the script with root privileges, or “shell” to run the script in a plain shell environment. For “chroot” the script will be placed at “/” and executed from this folder. For all other environments, the current work directory will be the folder containing the target environment. In addition, parameters which are forwarded to the script can be provided as “params”.

- template (initrd/root) [default: None]: A Jinja2 template to create a configuration. In case of the initrd generator, a template for the init script can be provided. In case of the root generator, a template for the kiwi-ng XML image specification can be provided.

- name (boot/initrd/root) [default: None]: A name which is used in the filenames of the generated artifacts.

- download_deps (boot/initrd) [default: True]: Download the dependencies of the specified packages. This parameter must be True, to use e.g. a meta-package for the kernel binary and modules.

- base_tarball (boot/initrd) [default: None]: A base chroot environment for generating the boot artifacts and for the initrd.img. If no base chroot environment is given, a minimal busybox based environment will be used.

- packages (boot/initrd/root/config) [default: None]: A list of packages. For the root generator, these packages are installed in the base debootstrap environment. For the initrd generator, these packages will be downloaded, extracted and integrated into the resulting initrd.img. For the boot generator, these packages will be downloaded and extracted to get the kernel binary.

- kernel (boot/initrd/root) [default: None]: Name of the kernel package. For the initrd generator, this package will be downloaded and extracted to a temporary folder to get the required kernel modules.

- tar (boot) [default: True]: Flag for packing the boot artifacts as a tarball. If embdgen is used to write the artifacts to an image, this will preserve the owner and mode of the artifacts.

- busybox (initrd) [default: busybox-static]: Name of the busybox package for the minimal busybox environment.

- modules (initrd) [default: None]: List of kernel modules to add and load from the initrd.img. Example:

      modules:
        - kernel/drivers/virtio/virtio.ko
        - kernel/drivers/virtio/virtio_ring.ko
        - kernel/drivers/block/virtio_blk.ko
        - kernel/net/core/failover.ko
        - kernel/drivers/net/net_failover.ko
        - kernel/drivers/net/virtio_net.ko

- root_device (initrd) [default: None]: Name of the root device to mount.

- devices (initrd) [default: None]: List of device nodes to add. Example:

      devices:
        - name: mmcblk1
          type: block
          major: 8
          minor: 0
        - name: console
          type: char
          major: 5
          minor: 1

  In addition, the parameters “mode”, to specify the mode of the device node, “uid”, to specify the owner of the device node, and “gid”, to specify the owning group of the device node, can be used.

- kernel_version (initrd) [default: auto detected]: The kernel version of the copied modules.

- modules_folder (initrd) [default: None]: A folder in the host or container environment containing the kernel modules. This can be used to provide modules from a local kernel build. Example:

      modules_folder: $$RESULTS$$

  The string $$RESULTS$$ will be replaced with the path to the output folder, for all paths given in YAML config files of the build tools.

- result_pattern (root) [default: auto detected]: A name pattern to match the build result, e.g. *.tar.xz for kiwi-ng tbz builds.

- image (boot/initrd/root/config) [default: None]: A kiwi-ng XML image description. This parameter can be used to integrate old image descriptions into new build flows.

- berrymill_conf (root) [default: None]: A berrymill.conf used for berrymill build. If none is given, the configuration will be automatically generated using the provided apt repositories. This parameter can be used to integrate old image descriptions into new build flows.

- use_berrymill (root) [default: True]: Flag to use berrymill for kiwi-ng build. If this flag is set to false, kiwi-ng will be called without the berrymill wrapper.

- use_bootstrap_package (root) [default: True]: Flag if a bootstrap package shall be used for kiwi-ng builds. If this flag is set to True, one of the specified repositories needs to provide the bootstrap package.

- bootstrap_package (root) [default: bootstrap-root-ubuntu-jammy]: Name of the bootstrap package for the kiwi-ng build.

- bootstrap (root) [default: None]: List of additional bootstrap packages for the kiwi-ng build.

- kiwi_root_overlays (root) [default: None]: List of root overlay folders for the kiwi-ng build.

- use_kiwi_defaults (root) [default: True]: If this flag is true, the “root” folder and the kiwi-ng config scripts next to the appliance.kiwi will be provided to kiwi-ng.

- kiwi_scripts (root) [default: None]: List of additional scripts which will be provided to kiwi-ng during the build.

- kvm (root) [default: True]: Flag if KVM acceleration shall be used for kiwi-ng builds.

- image_version (root) [default: 1.0.0]: Image version for the generated kiwi-ng image description.

- type (root) [default: debootstrap]: Type of the root filesystem generator to use. The supported generators are “debootstrap” and “kiwi”.

- primary_repo (root) [default: auto selected Ubuntu Jammy repository]: The primary apt repository for the debootstrap or kiwi-ng build. The main component of this repository is used for debootstrap.

- primary_distro (root) [default: jammy]: The name of the distribution used for debootstrap.

- root_password (root) [default: linux]: The root password of the generated root filesystem.

- hostname (root) [default: ebcl]: The hostname of the generated root filesystem.

- domain (root) [default: elektrobit.com]: The domain name of the generated root filesystem.

-

console (root) [default: auto configured ]: The console parameter of the generated root filesystem. If none is given, “ttyS0,115200” is used for amd64, and “ttyAMA0,115200” is used for arm64.

-

sysroot_packages (boot/initrd/root/config) [default: None ]: List of additional packages which shall be installed for sysroot builds. This can be used to add additional development headers.

-

sysroot_defaults (boot/initrd/root/config) [default: True ]: Flag if the default additional packages for sysroot builds shall be added. If yes, in addition to the specified packages the packages “build-essential” and “g++” will be added.
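The architecture-dependent default of the console parameter can be pictured as a small lookup. The following shell sketch only illustrates the documented defaults. The function name default_console is hypothetical and not part of the build tools, and the sketch assumes that “ttyAMA0,115200” is the arm64 default.

```shell
#!/bin/sh
# Illustration only: map an architecture name to the documented default console.
# default_console is a hypothetical helper, not part of the EBcL build tools.
default_console() {
    case "$1" in
        amd64) echo "ttyS0,115200" ;;
        arm64) echo "ttyAMA0,115200" ;;   # assumption: the arm64 default
        *)     echo "unknown architecture: $1" >&2; return 1 ;;
    esac
}

default_console amd64
default_console arm64
```

Setting the console parameter explicitly in the root specification overrides this default.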

Building an image from scratch

Let’s develop a new EB corbos Linux image step by step, for the NXP RDB2 board using the NXP S32G2 SoC. According to the NXP S32G2 user manual, the following bootloader layout is required:

The space between 0x0 and 0x1d_3000 is occupied by some or all of the following components: IVT, QSPI Parameters, DCD, HSE_FW, SYS_IMG, Application Boot Code Header, TF-A FIP image. The actual layout is determined at boot time and can be obtained from the arm-trusted-firmware.

IVT: Offset: 0x1000 Size: 0x100 AppBootCode Header: Offset: 0x1200 Size: 0x40 U-Boot/FIP: Offset: 0x1240 Size: 0x3d400 U-Boot Environment: Offset: 0x1e0000 Size: 0x2000

For SD/eMMC the partitioned space begins at 0x1d_3000.

For our SD card image, this means that the first 256 B of the FIP image, which contains the ATF and U-Boot, must be written to block 0. A gap of 0x2000 B at position 0x1e0000 must be reserved for the U-Boot environment, and the remaining part of the ATF and U-Boot image is written as a second raw part. The partition table and the partitions come afterwards.
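To make the byte positions concrete, the following shell sketch places a dummy blob into a scratch image with dd, using the offsets of the layout (first 256 B at offset 0, the rest from 512 B onwards). It is not how embdgen works internally, and all file names are placeholders. The untouched bytes 256 to 511 are presumably kept free because an MBR stores its partition table in the last bytes of sector 0.

```shell
#!/bin/sh
# Sketch: place a boot blob around the tail of sector 0 of a raw image.
# fip.bin is dummy data, not a real FIP image; all names are placeholders.
LC_ALL=C; export LC_ALL
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
fip="$work/fip.bin"
img="$work/image.raw"

# Dummy 2 KiB "FIP" filled with 0xff bytes, and a zeroed 4 KiB image.
dd if=/dev/zero bs=2048 count=1 2>/dev/null | tr '\000' '\377' > "$fip"
dd if=/dev/zero of="$img" bs=4096 count=1 2>/dev/null

# Part 1: the first 256 bytes of the blob go to offset 0 of the image.
dd if="$fip" of="$img" bs=256 count=1 conv=notrunc 2>/dev/null
# Part 2: everything from byte 512 of the blob goes to byte 512 of the image.
dd if="$fip" of="$img" bs=512 skip=1 seek=1 conv=notrunc 2>/dev/null

# Bytes 256..511 of the image were never written and are still zero.
gap_nonzero=$(dd if="$img" bs=256 skip=1 count=1 2>/dev/null | tr -d '\000' | wc -c)
echo "non-zero bytes in the gap: $gap_nonzero"

# Position of the U-Boot environment in decimal bytes.
env_start=$((0x1e0000))
env_end=$((0x1e0000 + 0x2000))
echo "U-Boot env: $env_start..$env_end"
```

The image description below expresses the same placement declaratively, so no manual dd calls are needed in the real build.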

Furthermore, the user manual describes that the kernel can be provided as a FIT image, and one way to provide this FIT image is to put it on the first partition, which has to be FAT32, using the name fitimage.

All these requirements can be fulfilled with the following embdgen image description:

# Partition layout of the image

# For more details see https://elektrobit.github.io/embdgen/index.html

image:

type: mbr

boot_partition: boot

parts:

- name: u-boot part 1

type: raw

start: 0

size: 256 B

content:

type: raw

file: out/fip.s32

- name: u-boot part 2

type: raw

start: 512 B

content:

type: raw

file: out/fip.s32

offset: 512 B

- name: uboot.env

type: empty

start: 0x1e0000 B

size: 0x2000 B

- name: boot

type: partition

fstype: fat32

content:

type: fat32

content:

type: files

files:

- out/fitimage

size: 100 MB

- name: root

type: partition

fstype: ext4

size: 2 GB

content:

type: ext4

content:

type: archive

archive: out/ebcl_rdb2.config.tar

You may notice that this image description requires three artifacts:

-

fip.s32: This is the binary image containing the arm trusted firmware (ATF) and the U-Boot bootloader.

-

fitimage: This is the binary flattened image tree (FIT) containing the kernel and device tree.

-

ebcl_rdb2.config.tar: This is a tarball containing the contents of our Linux root filesystem.
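Before the image is assembled, it can help to check that all three artifacts are present in the output folder. The following shell sketch shows one way to do this. The helper name missing_artifacts is hypothetical, and the demo uses a scratch folder with an empty placeholder file instead of real build results.

```shell
#!/bin/sh
# Sketch: report which of the three input artifacts are missing from a folder.
# missing_artifacts is a hypothetical helper; the file names are taken from
# the image description above.
missing_artifacts() {
    dir=$1
    for name in fip.s32 fitimage ebcl_rdb2.config.tar; do
        [ -f "$dir/$name" ] || echo "$name"
    done
}

# Demo with a scratch folder that contains only a placeholder fitimage.
demo=$(mktemp -d)
: > "$demo/fitimage"
missing_artifacts "$demo"
```

In a real workspace the function would be called with the output folder, for example missing_artifacts ./out.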

Since the NXP S32G2 SoC is supported by EB corbos Linux, a FIP image and a kernel binary are provided as part of the releases and the free download. The fip.s32 image is contained in the Debian package arm-trusted-firmware-s32g, and provided on https://linux.elektrobit.com/eb-corbos-linux/1.2 as part of the distribution ebcl_nxp_public in the component nxp_public. The kernel binary and modules are provided by the same distro and component, packaged as linux-image-unsigned-5.15.0-1034-s32-eb-optimized, linux-modules-5.15.0-1034-s32-eb-optimized and linux-modules-extra-5.15.0-1034-s32-eb-optimized.

The tooling to build the fitimage is contained in the packages u-boot-s32-tools, arm-trusted-firmware-s32g, device-tree-compiler, and nautilos-uboot-tools. We need to install these tools in some environment to be able to build the fitimage. Adding them to the root filesystem would be a possibility, but not a good one, since this would bloat the root filesystem and also give very useful tools to an attacker trying to hack our embedded solution. Since the tooling is only needed at build time, a better approach is to install it in a separate environment. This could be our build host, but since we want reproducible builds, the better solution is to use the root generator to define and create a well specified chroot build environment.

Let’s first define some common settings used by our image overall, as base.yaml:

# Kernel package to use

kernel: linux-image-unsigned-5.15.0-1034-s32-eb-optimized

# CPU architecture

arch: arm64

# Add the EB corbos Linux apt repo

use_ebcl_apt: true

# Add repo with NXP RDB2 packages

apt_repos:

- apt_repo: https://linux.elektrobit.com/eb-corbos-linux/1.4

distro: ebcl_nxp_public

components:

- nxp_public

key: file:///build/keys/elektrobit.pub

gpg: /etc/berrymill/keyrings.d/elektrobit.gpg

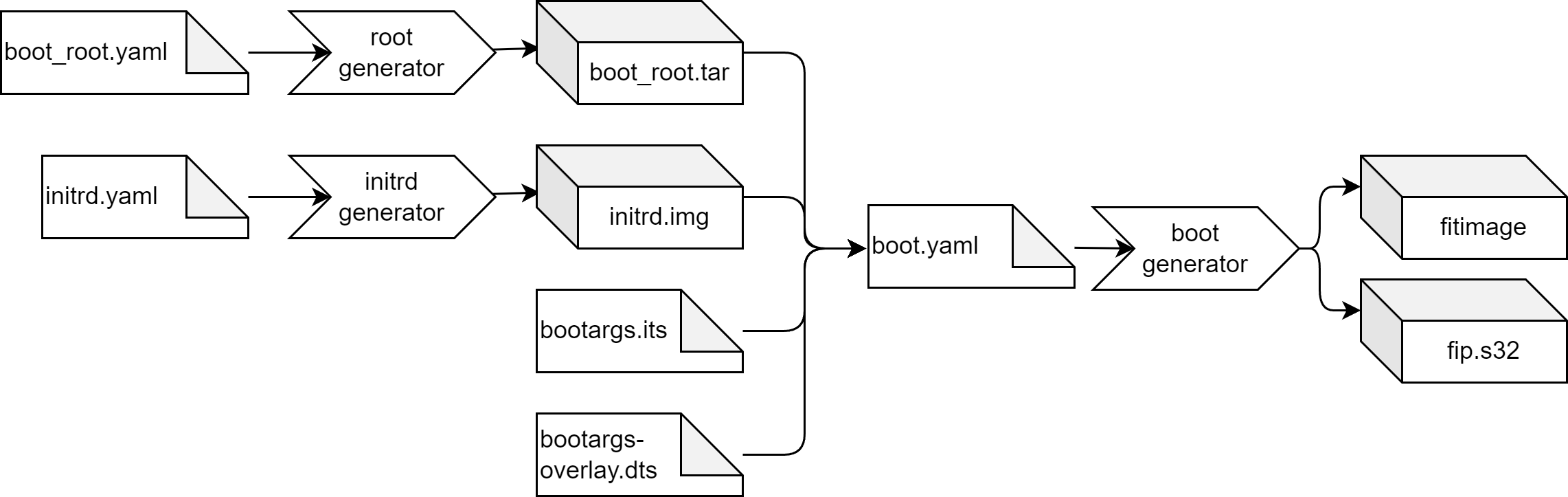

This base.yaml states that we want to use the kernel package linux-image-unsigned-5.15.0-1034-s32-eb-optimized, build an arm64 image, and make use of the default EBcL apt repository and the EBcL NXP additions. Now we can build on this file and define our fitimage build environment as boot_root.yaml:

# Derive values from base.yaml - relative path

base: base.yaml

# Name of the boot root archive

name: boot_root

# Packages for boot_root.tar

packages:

- linux-image-unsigned-5.15.0-1034-s32-eb-optimized

- linux-modules-5.15.0-1034-s32-eb-optimized

- linux-modules-extra-5.15.0-1034-s32-eb-optimized

- u-boot-s32-tools

- arm-trusted-firmware-s32g

- device-tree-compiler

- nautilos-uboot-tools

We install all the above mentioned packages into this environment. For building the fitimage, and for extracting the fip.s32, we can make use of the boot generator:

# Derive values from base.yaml - relative path

base: base.yaml

# Reset the kernel value - we don't want to download and extract it

kernel: null

# Do not pack the files as tar

tar: false

# do not download and extract these packages, they are already installed in the boot_root.tar

use_packages: false

# Name of the boot root archive

base_tarball: $$RESULTS$$/boot_root.tar

# Files to copy from the host environment

host_files:

- source: bootargs-overlay.dts

destination: boot

- source: bootargs.its

destination: boot

- source: $$RESULTS$$/initrd.img

destination: boot

base_dir: .

# Scripts to build the fitimage and fip.s32

scripts:

- name: build_fitimage.sh # Build the fitimage in the boot_root.tar environment

env: chroot

# Files to copy to the build folder

files:

- boot/fip.s32

- boot/fitimage

The kernel is already part of the chroot tarball environment, and we don’t need to download it again.

We need to provide the fitimage and fip.s32 binaries directly to embdgen, so we don’t want to pack them.

The tarball created by the root generator will be named “boot_root.tar”, because of the name given in the boot_root.yaml. Because of the “base_tarball” parameter, the boot generator will pick up the tarball, extract it and chroot into this environment.

The boot generator will also replace the string $$RESULTS$$ with the path to the given output folder.

In addition, we need the files “bootargs-overlay.dts”, “bootargs.its” and $$RESULTS$$/initrd.img in the host environment.

These files will be copied into the chroot environment and used for building the fitimage.

The script build_fitimage.sh implements the fitimage building.

When this script has done its job, the files fip.s32 and fitimage will be copied to the output folder.
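The effect of the $$RESULTS$$ replacement can be reproduced with a one-line substitution. The following shell sketch only shows the resulting text and is not the code used by the build tools. The helper name expand_results is hypothetical, and the sketch assumes the output folder name contains neither “|” nor “&”.

```shell
#!/bin/sh
# Sketch: what replacing the $$RESULTS$$ placeholder amounts to.
# expand_results is a hypothetical helper, not the build tools' actual code.
expand_results() {
    # $1: output folder; the text to process is read from stdin.
    # The dollar signs are escaped so that sed treats them literally.
    sed 's|\$\$RESULTS\$\$|'"$1"'|g'
}

printf '%s\n' 'base_tarball: $$RESULTS$$/boot_root.tar' | expand_results ./out
```

With ./out as output folder, the base_tarball entry of the boot specification therefore points at the tarball written by the root generator.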

To use this recipe, we first need the input artifacts. The bootargs.its is the fitimage description we need to provide. The following description will do the job:

/dts-v1/;

/ {

description = "BaseOS Boot Image";

#address-cells = <1>;

images {

kernel-1 {

description = "Linux kernel image";

data = /incbin/("Image");

type = "kernel";

arch = "arm64";

os = "linux";

compression = "none";

load = <0x80000000>;

entry = <0x80000000>;

hash-1 { algo = "md5"; };

};

fdt-1 {

description = "Flattened device tree blob";

data = /incbin/("target.dtb");

type = "flat_dt";

arch = "arm64";

compression = "none";

hash-1 { algo = "md5"; };

};

ramdisk-1 {

description = "Initial ramdisk image";

data = /incbin/("initrd");

type = "ramdisk";

arch = "arm64";

os = "linux";

compression = "gzip";

load = <0x90000000>;

entry = <0x90000000>;

hash-1 { algo = "md5"; };

};

};

configurations {

default = "config-1";

config-1 {

description = "Default boot config";

kernel = "kernel-1";

ramdisk = "ramdisk-1";

fdt = "fdt-1";

};

};

};

It describes a fitimage consisting of a kernel binary, a device tree and an initrd.img.

The bootargs-overlay.dts is the U-Boot configuration:

/dts-v1/;

/plugin/;

&{/chosen} {

bootargs = "console=ttyLF0,115200 earlycon nohz=off coherent_pool=64M root=/dev/mmcblk0p2 selinux=0 rw";

};

The initrd.img is the initial ramdisk we want to use. We can use the initrd generator to create an initrd.img which fits our needs. As long as we don’t want to implement secure boot, our needs are quite small. We just want to use /dev/mmcblk0p2 as root partition, which is partition two of the internal eMMC storage.

# Derive values from base.yaml - relative path

base: base.yaml

# Reset the kernel value - we don't want to download and extract it

kernel: null

# Root device to mount

root_device: /dev/mmcblk0p2

Running the initrd generator with this spec will create a minimal initrd.img for us.
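The root device appears in two places, in the bootargs of the device tree overlay and in the initrd specification, and both need to agree. The following shell sketch shows a possible consistency check. The helper name bootarg_root is hypothetical, and the two values are copied by hand from the files above rather than parsed from them.

```shell
#!/bin/sh
# Sketch: extract the root= argument from a kernel command line.
# bootarg_root is a hypothetical helper for a manual consistency check.
bootarg_root() {
    for arg in $1; do
        case "$arg" in
            root=*) echo "${arg#root=}"; return 0 ;;
        esac
    done
    return 1
}

# Values copied from bootargs-overlay.dts and from the initrd specification.
bootargs="console=ttyLF0,115200 earlycon nohz=off coherent_pool=64M root=/dev/mmcblk0p2 selinux=0 rw"
root_device="/dev/mmcblk0p2"

if [ "$(bootarg_root "$bootargs")" = "$root_device" ]; then
    echo "bootargs and initrd agree on $root_device"
else
    echo "root device mismatch" >&2
fi
```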

The final missing input is the script to generate the fitimage. We can use the following script:

#!/bin/sh

set -e

#======================

# Get NXP S32G ATF (secure boot image)

#---------------------------------------

cp /usr/lib/arm-trusted-firmware-s32g/s32g274ardb2/fip.s32 \

/boot/fip.s32

#======================

# Rename kernel

#---------------------------------------

echo "Rename kernel..."

if [ -f /boot/vmlinuz ]; then

mv /boot/vmlinuz /boot/Image

else

mv /boot/vmlinuz-* /boot/Image

fi

mv /boot/initrd.img /boot/initrd

#======================

# Get NXP S32G device tree

#---------------------------------------

cp /lib/firmware/*/device-tree/freescale/s32g274a-rdb2.dtb \

/boot/fsl-s32g274a-rdb2.dtb

#======================

# Create fit image

#---------------------------------------

cd /boot

dtc -I dts -O dtb -o bootargs-overlay.dtbo bootargs-overlay.dts

fdtoverlay -i fsl-s32g274a-rdb2.dtb -o target.dtb bootargs-overlay.dtbo

ls -lah bootargs-overlay.dtbo

mkimage -f bootargs.its fitimage

Now we are prepared to build our fitimage, and get the fip.s32 binary.

We can build the boot_root.tar using the command root_generator boot_root.yaml ./out, then we can build the initrd.img using the command initrd_generator initrd.yaml ./out, and finally we can build the fitimage using the command boot_generator boot.yaml ./out.

To avoid typing all these commands by hand, we can use make. The following Makefile will do the job:

#--------------

# Result folder

#--------------

result_folder ?= ./out

#---------------------

# Select bash as shell

#---------------------

SHELL := /bin/bash

#---------------------

# Image specifications

#---------------------

partition_layout ?= image.yaml

initrd_spec ?= initrd.yaml

boot_root_spec ?= boot_root.yaml

boot_spec ?= boot.yaml

#-------------------------

# Additional configuration

#-------------------------

# Build script for the fitimage

build_fitimage ?= build_fitimage.sh

# Layout of the fitimage

fitimage_config ?= bootargs.its

# NXP bootloader config

bootloader_config ?= bootargs-overlay.dts

#--------------------

# Generated artifacts

#--------------------

# Disc image

disc_image ?= $(result_folder)/image.raw

# Boot root tarball

boot_root ?= $(result_folder)/boot_root.tar

# Fitimage

fitimage ?= $(result_folder)/fitimage

# Generated initrd.img

initrd_img ?= $(result_folder)/initrd.img

#--------------------------

# Image build configuration

#--------------------------

# The initrd image is built using the initrd generator.

# initrd_spec: specification of the initrd image.

$(initrd_img): $(initrd_spec)

@echo "Build initrd.img..."

mkdir -p $(result_folder)

set -o pipefail && initrd_generator $(initrd_spec) $(result_folder) 2>&1 | tee $(initrd_img).log

# The root generator is used to build a sysroot variant of the root filesystem.

# root_filesystem_spec: specification of the root filesystem

#

# --no-config means that the configuration step is skipped

$(sysroot_tarball): $(root_filesystem_spec)

@echo "Build sysroot.tar..."

mkdir -p $(result_folder)

set -o pipefail && root_generator --sysroot --no-config $(root_filesystem_spec) $(result_folder) 2>&1 | tee $(sysroot_tarball).log

# The root generator is used to build a chroot environment which contains all tools for building the fitimage.

# boot_root_spec: specification of the fitimage build environment

$(boot_root): $(boot_root_spec)

@echo "Build $(boot_root) from $(boot_root_spec)..."

mkdir -p $(result_folder)

set -o pipefail && root_generator --no-config $(boot_root_spec) $(result_folder) 2>&1 | tee $(boot_root).log

# The boot generator is used to run the fitimage build in a chroot environment.

# boot_spec: spec of the fitimage build environment

# boot_root: tarball of the fitimage build environment

# build_fitimage: build script for the fitimage

# fitimage_config: fitimage layout configuration

# bootloader_config: bootloader configuration

# initrd_img: the initrd.img which is embedded in the fitimage

# initrd_spec: the initrd.img specification

$(fitimage): $(boot_spec) $(boot_root) $(build_fitimage) $(fitimage_config) $(bootloader_config) $(initrd_img)

@echo "Build $(fitimage)..."

mkdir -p $(result_folder)

set -o pipefail && boot_generator $(boot_spec) $(result_folder) 2>&1 | tee $(fitimage).log

#--------------------------------

# Default make targets for images

#--------------------------------

# build of the initrd.img(s)

.PHONY: initrd

initrd: $(initrd_img)

# build of the fitimage

.PHONY: boot

boot: $(fitimage)

# build of the fitimage build env

.PHONY: boot_root

boot_root: $(boot_root)

# clean - delete the generated artifacts

.PHONY: clean

clean:

rm -rf $(result_folder)

Now the board specific parts are done, and the only missing piece to build the image is the root filesystem. A minimal root filesystem making use of the systemd init manager can be specified as:

base: base.yaml

name: ebcl_rdb2

type: debootstrap

packages:

- systemd

- udev

- util-linux

# Scripts to configure the root tarball

scripts:

- name: config_root.sh # Name of the script, relative path to this file

env: fake

The config_root.sh script is needed to link systemd as /sbin/init.

#!/bin/sh

# Link systemd as init

ln -s /usr/lib/systemd/systemd ./sbin/init
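The link step can be tried out in a scratch directory before it is used in a real build. The following shell sketch assumes, as the relative path ./sbin/init suggests, that the script runs with the unpacked root filesystem as working directory. The scratch layout and the use of ln -sf, which makes the step repeatable, are illustrative choices and not part of the original script.

```shell
#!/bin/sh
# Sketch: try the init link in a scratch directory instead of a real root filesystem.
# The scratch layout is an assumption that mimics an unpacked root tarball.
rootfs=$(mktemp -d)
mkdir -p "$rootfs/sbin" "$rootfs/usr/lib/systemd"
: > "$rootfs/usr/lib/systemd/systemd"    # empty placeholder for the systemd binary

(
    cd "$rootfs" || exit 1
    # -f replaces an existing link, so running the step twice does not fail.
    ln -sf /usr/lib/systemd/systemd ./sbin/init
    ln -sf /usr/lib/systemd/systemd ./sbin/init
)

# Print the link target as stored in the scratch root filesystem.
readlink "$rootfs/sbin/init"
```

The link target is an absolute path, so it resolves correctly only once the directory is used as the root of the running system.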

To build the root filesystem tarball, we can run root_generator root.yaml ./out, or we extend our Makefile.

#---------------------

# Image specifications

#---------------------

# Specification of the root filesystem content and configuration

root_filesystem_spec ?= root.yaml

#-------------------------

# Additional configuration

#-------------------------

# Config script for root filesystem

config_root ?= config_root.sh

#--------------------

# Generated artifacts

#--------------------

# Base root tarball

base_tarball ?= $(result_folder)/ebcl_rdb2.tar

# Configured root tarball

root_tarball ?= $(result_folder)/ebcl_rdb2.config.tar

#--------------------------

# Image build configuration

#--------------------------

# The root generator is used to build the base root filesystem tarball.

# root_filesystem_spec: specification of the root filesystem packages.

#

# This first step only installs the specified packages. User configuration

# is done as a second step, because the build of this tarball is quite

# time consuming and configuration is fast. This is an optimization for

# the image development process.

$(base_tarball): $(root_filesystem_spec)

@echo "Build root.tar..."

mkdir -p $(result_folder)

set -o pipefail && root_generator --no-config $(root_filesystem_spec) $(result_folder) 2>&1 | tee $(base_tarball).log

# The root configurator is used to run the user configuration scripts

# as a separate step in the build process.

# base_tarball: tarball which is configured

# config_root: the used configuration script

$(root_tarball): $(base_tarball) $(config_root)

@echo "Configuring ${base_tarball} as ${root_tarball}..."

mkdir -p $(result_folder)

set -o pipefail && root_configurator $(root_filesystem_spec) $(base_tarball) $(root_tarball) 2>&1 | tee $(root_tarball).log

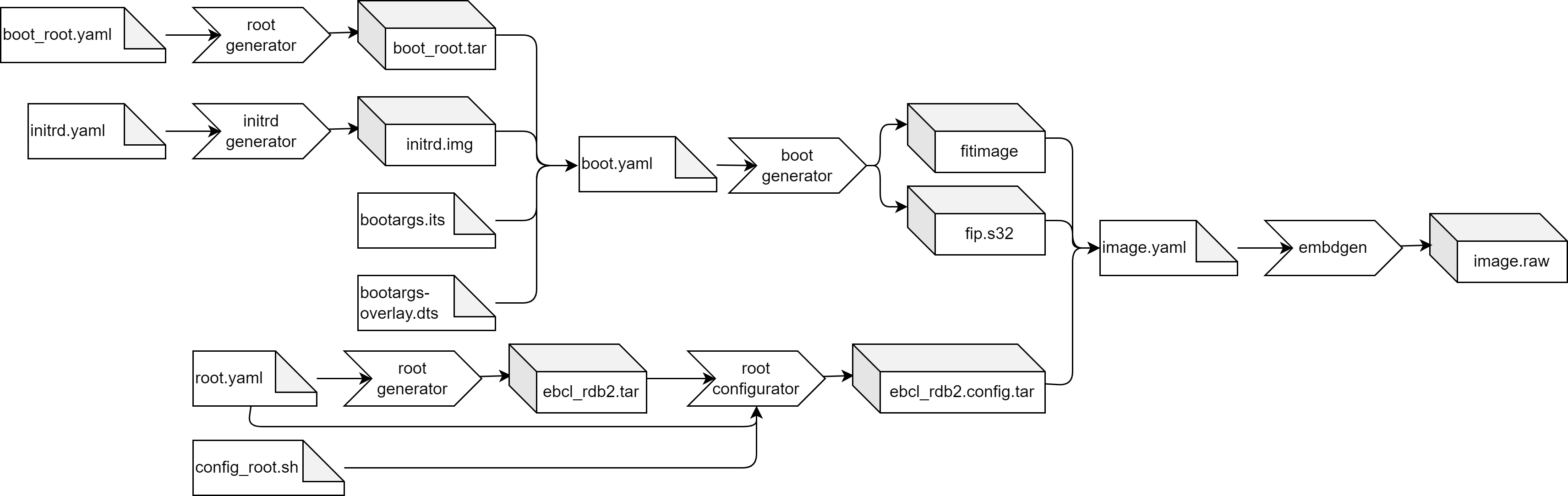

The above makefile splits the image installation and the configuration step of building the root tarball. This is useful if you expect changes for the configuration, because the installation step is quite time consuming, and the configuration step is quite fast. This optimization can save you a lot of build time.
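The time saving comes from the way make decides what to rebuild: a target is rebuilt only if it is missing or older than one of its prerequisites. The following shell sketch imitates this rule with file timestamps. The helper name needs_rebuild and the file names are hypothetical stand-ins for the tarballs and specifications, and the sketch relies on the -nt test, which common shells such as bash and dash provide.

```shell
#!/bin/sh
# Sketch of make's rule: rebuild a target if it is missing or older than a prerequisite.
# needs_rebuild and the file names are hypothetical stand-ins.
needs_rebuild() {
    target=$1; shift
    [ -e "$target" ] || return 0
    for dep in "$@"; do
        [ "$dep" -nt "$target" ] && return 0
    done
    return 1
}

dir=$(mktemp -d)
touch -t 202401010000 "$dir/root.yaml"
touch -t 202401010100 "$dir/base.tar"          # built after the spec was written
touch -t 202401010200 "$dir/base.config.tar"   # configured after the base build
touch -t 202401010300 "$dir/config_root.sh"    # config script edited afterwards

needs_rebuild "$dir/base.tar" "$dir/root.yaml" && echo "reinstall packages"
needs_rebuild "$dir/base.config.tar" "$dir/base.tar" "$dir/config_root.sh" && echo "rerun configuration"
```

In this scenario only the configuration step is due, because the edited script is newer than the configured tarball while the base tarball is still newer than its specification.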

Finally we need to run embdgen to build our binary image.

This can be done manually running embdgen image.yaml ./out, but we can also add it to our Makefile.

#---------------------

# Image specifications

#---------------------

# Specification of the partition layout of the image.raw

partition_layout ?= image.yaml

#--------------------

# Generated artifacts

#--------------------

# Disc image

disc_image ?= $(result_folder)/image.raw

#--------------------------

# Image build configuration

#--------------------------

# Embdgen is used to build the SD card image.

# fitimage: the fitimage containing the kernel, the device tree and the initrd.img

# root_tarball: the contents of the root filesystem

# partition_layout: the partition layout of the SD card image

#

# The bootloader fip.s32 is not explicitly mentioned, since it is built in one step

# with the fitimage.

$(disc_image): $(fitimage) $(root_tarball) $(partition_layout)

@echo "Build image..."

mkdir -p $(result_folder)

set -o pipefail && embdgen -o ./$(disc_image) $(partition_layout) 2>&1 | tee $(disc_image).log

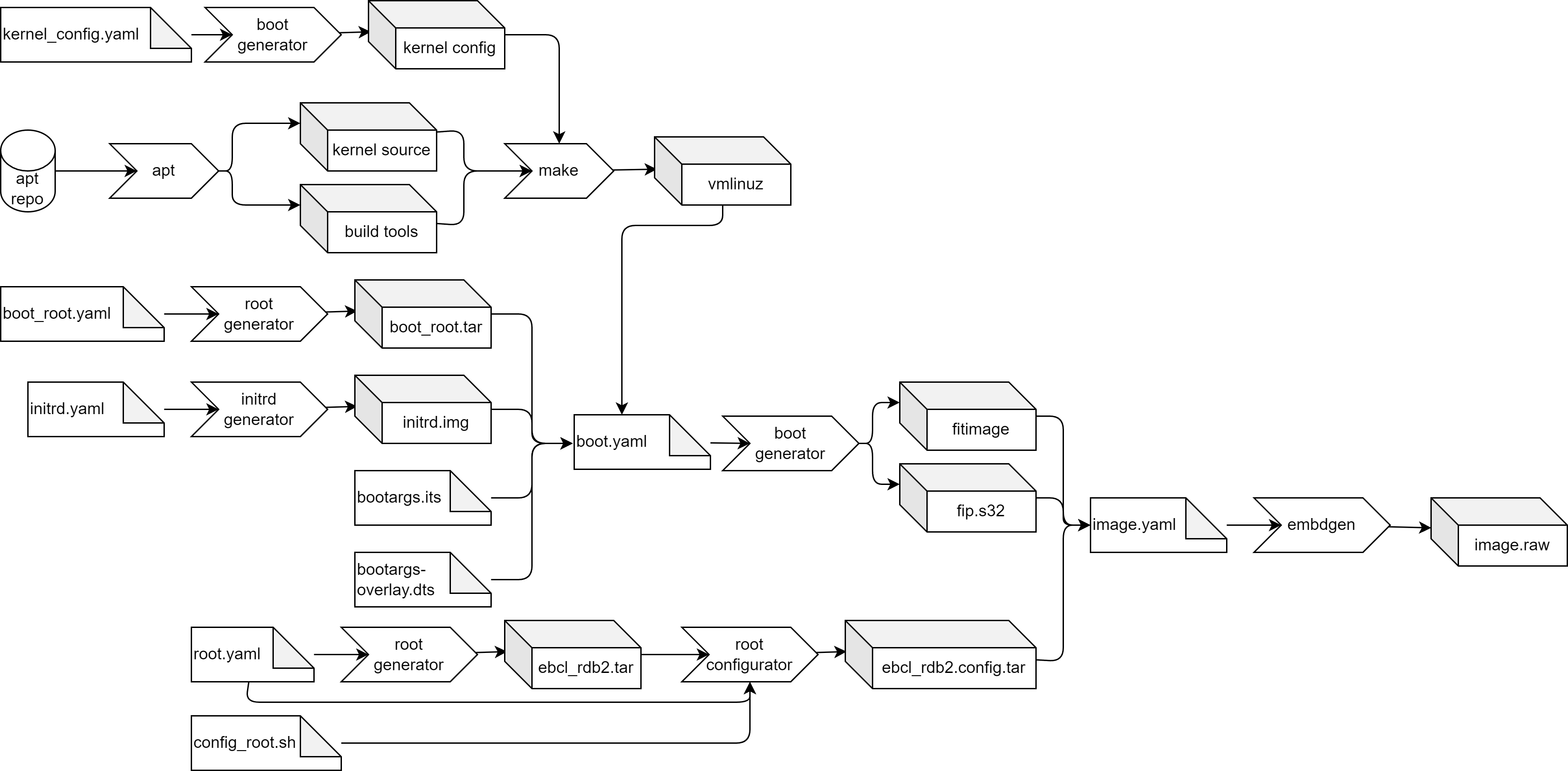

Now you have an image which you can flash to your NXP RDB2 board. The overall build flow with the changes above is:

As described in the previous chapters, an EB corbos Linux image typically consists of a Makefile and specification yaml files.

The Makefile is SoC specific, should be only changed during SoC and board bring-up, and can be shared with all images for the SoC.

If different image variants are used, also parts of the image configuration can be shared.

To avoid redundancy, the example images make use of this sharing.

The example images are contained in the images folder of the EB corbos Linux template workspace, and are structured by CPU architecture, distribution, init-manager and further variant descriptions.

The example image for amd64 and the QEMU target, using the EBcL distribution, and the crinit init-manager is contained in images/amd64/qemu/ebcl/crinit, and you can build and run it by executing make in this folder.

Please be aware that the example images are only considered for educational purposes. These images are not pre-qualified. If you are an EB corbos Linux customer, and want to start a new industrial embedded Linux project which requires qualification and maintenance, please choose one of the provided reference images as a starting point. These images are already pre-qualified and get up to 15 years of maintenance.

The amd64 images

EB corbos Linux doesn’t support any amd64 hardware at the moment, but we provide some QEMU amd64 images. Using amd64 for development may help to make your development flow much smoother since you don’t have to handle the tricky aspects of cross-building.

For amd64/qemu we provide example images for EB corbos Linux (EBcL) and for Ubuntu Jammy. The difference between EBcL and Jammy is that EBcL provides some additional components, like the crinit init-manager and the elos logging and event framework, and that EBcL provides a qualified security maintenance release every three months, while Jammy is providing updates continuously, using less strict qualification and documentation. Additionally there is an example image provided for application development. You can find more about application development in later chapters.

The amd64 Jammy image

In images/amd64/qemu/jammy you can find a basic example image demonstrating how to use the EB corbos Linux SDK. This folder contains the configuration and makes use of the QEMU images/qemu*.mk include makefiles.

# Kernel package to use

kernel: linux-image-generic

# Apt repositories to use

apt_repos:

- apt_repo: http://archive.ubuntu.com/ubuntu

distro: jammy

components:

- main

- universe

- apt_repo: http://archive.ubuntu.com/ubuntu

distro: jammy-security

components:

- main

- universe

# CPU architecture

arch: 'amd64'

The example makes use of the kernel “linux-image-generic”. This is a meta-package and always takes the latest available Ubuntu Jammy package. The Canonical Ubuntu apt repositories are used to build the example.

# Partition layout of the image

# For more details see https://elektrobit.github.io/embdgen/index.html

image:

type: gpt

boot_partition: root

parts:

- name: root

type: partition

fstype: ext4

size: 2 GB

content:

type: ext4

content:

type: archive

archive: build/ubuntu.config.tar

The example makes use of a very simple image consisting of a gpt partition table and a single ext4 root partition with a size of 2 GB.

# Derive values from base.yaml - relative path

base: base.yaml

# Root device to mount

root_device: /dev/vda1

# List of kernel modules

modules:

- kernel/drivers/block/virtio_blk.ko # virtio_blk is needed for QEMU

- kernel/fs/autofs/autofs4.ko # Wanted by systemd

The initrd.img loads the virt-IO block and the autofs4 drivers and then mounts /dev/vda1 as the root filesystem.

# Derive values from base.yaml - relative path

base: base.yaml

# Download dependencies of the kernel package - necessary if meta-package is specified

download_deps: true

# Files to copy from the packages

files:

- boot/vmlinuz*

- boot/config*

# Do not pack the files as tar - we need to provide the kernel binary to QEMU

tar: false

The boot.yaml is used to download and extract the kernel binary. In addition, the kernel config is extracted.

# Derive the base configuration

base: base.yaml

# Reset the kernel - should not be installed

kernel: null

# Name of the archive.

name: ubuntu

# Packages to install in the root tarball

packages:

- systemd

- udev # udev will create the device node for ttyS0

- util-linux

# Scripts to configure the root tarball

scripts:

- name: config_root.sh # Name of the script, relative path to this file

env: chroot # Type of execution environment - chfake means fakechroot

The root.yaml defines the root filesystem configuration of the example image. It uses “ubuntu” as name, builds a minimal root filesystem consisting only of the debootstrap base with systemd, udev, and util-linux additionally installed, and uses config_root.sh as configuration script, which links systemd as /sbin/init.

The amd64 EB corbos Linux images

EB corbos Linux (EBcL) is an embedded Linux distribution targeting automotive and other industrial embedded Linux solutions. The main differences between EBcL and Ubuntu are the release and qualification handling, and some additional components added by EBcL which allow building more lightweight and better performing embedded images.

# Kernel package to use

kernel: linux-image-generic

use_ebcl_apt: true

# CPU architecture

arch: 'amd64'

Again, the base.yaml is used to define the kernel package, the apt repos and the CPU architecture. The EBcL repo can be added using the “use_ebcl_apt” flag.

The boot.yaml does not differ from the one used for the Jammy images, and just extracts the kernel binary and configuration from the given kernel package. The image.yaml and the initrd.yaml are also identical to the ones used with the Jammy images.

The amd64 EB corbos Linux systemd image

EBcL supports the systemd init-manager and if startup time and the resource footprint are not too critical, it’s a quite good choice because all of the Ubuntu packages are fully compatible with it, and all services come with their configs for systemd. To run systemd without providing the init-manager using the kernel command line, we can link it as /sbin/init. This is done using the config_root.sh script. The amd64/qemu/ebcl/systemd defines a QEMU image using debootstrap for building the root filesystem. This root filesystem is a very minimal one, only providing systemd, udev and the default command line tools.

The amd64 EB corbos Linux crinit image

EBcL adds the crinit init-manager as an alternative to systemd. Crinit is a much more lightweight init-manager, compared with systemd, and tailored to embedded. Since all the hardware and use-cases are very well known in advance for an embedded system, many dynamic configuration and detection features of systemd can be skipped, which results in a faster and much more lightweight solution. The drawback of using crinit is that the Ubuntu packages are not prepared for crinit, and all service and startup configuration needs to be done by the user.

The necessary minimal configuration to use crinit is contained in images/amd64/qemu/ebcl/crinit/crinit_config, and this folder is copied as overlay to the root filesystem using the root.yaml. The script config_root.sh ensures that the sbin/init script, provided in the overlay, is executable. Instead of systemd, crinit and its command line client crinit-ctl is installed.

Let’s take a closer look at the crinit_config overlay. The sbin/init mounts the /proc filesystem and then runs the crinit init-manager. The /etc folder contains a minimal crinit configuration. The file /etc/crinit/default.series is the main configuration file, and the folder /etc/crinit/crinit.d contains the services we want to run. The task /etc/crinit/crinit.d/agetty-ttyS0.crinit runs agetty on the serial console ttyS0, so that we can login using the QEMU serial console. The task /etc/crinit/crinit.d/earlysetup.crinit sets the hostname, so that we get proper logs. The task /etc/crinit/crinit.d/mount.crinit takes care of mounting the additional filesystems.

The amd64/qemu/ebcl/crinit defines a QEMU image using debootstrap for building the root filesystem. This root filesystem is a very minimal one, only providing crinit.

The amd64 EB corbos Linux server images

The previous images were all very minimal images, only providing enough to boot and login to the system. For developing an embedded system this is the right place to start development, but for exploring and playing with the system it’s too little. The server images provide a more complete user experience and add logging, network, apt and ssh.

The amd64 EB corbos Linux server crinit image

The crinit variant of the server image is contained in images/amd64/qemu/ebcl-server/crinit. In addition to crinit, it provides the elos logging and event manager, which is a lightweight replacement of journald and dbus, which allows automatic log evaluation and event handling. To manage the network interfaces, netifd from the OpenWRT world is used. It’s a very powerful and nevertheless lightweight network manager used in many router solutions. As NTP client, ntpdate is used. To allow remote login, openssh-server is added. The image also contains apt to allow easy installation of additional packages, and the typical Linux tools and editors for playing and exploring.

The root_common.yaml is the shared root specification of all the EBcL server variants. It defines the name, the architecture and the common tools and services, like openssh-server. The root.yaml extends the package list with the crinit and elos specific packages, and defines the overlay for the crinit configuration and the config script for the crinit variant. This config_root.sh sets a machine ID, required by elos, and generates a /etc/hosts file.

Let’s take a look at the server configuration. In addition to the /usr/sbin/init, which runs crinit, a ntp_time.sh is provided. This ntp_time.sh does a one-shot NTP time update, as soon as the network is up, to avoid issues with apt and other time sensitive services. The /etc/apt folder provides the apt repository configuration for EBcL and Ubuntu Jammy. The file /etc/config/network/network is evaluated by netifd to bring up the network interfaces. This configuration makes use of a static IPv6 and a dynamic IPv4 configuration. The crinit tasks are extended with tasks to run elos, bring up the network, run the SSH service, and trigger the NTP time update. The file /etc/elos/elosd.json contains some basic elos configuration, to use it as syslog daemon. The config /etc/ssh/sshd_config.d/10-root-login.conf enables SSH login as root. The config /etc/gai.conf ensures that IPv4 DNS is preferred over IPv6. The other config files just set some reasonable defaults.

The amd64 EB corbos Linux server systemd image

The folder images/amd64/qemu/ebcl-server/systemd contains a variant of the EBcL server image using systemd as init manager. It’s mainly provided as a reference, to compare the configuration and performance.

The arm64 images

EB corbos Linux comes with arm64 based example images for rpi4 and nxp s32g boards at the moment. To ease development and testing we also provide QEMU arm64 images.

For arm64/qemu we provide example images for EB corbos Linux (EBcL) and for Ubuntu Jammy. The difference between EBcL and Jammy is that EBcL provides some additional components, like the crinit init-manager and the elos logging and event framework, and that EBcL provides a qualified security maintenance release every three months, while Jammy is providing updates continuously, using less strict qualification and documentation. Additionally there is an example image provided for application development. You can find more about application development in later chapters.

The arm64 Jammy image

In images/arm64/qemu/jammy you can find a basic example image demonstrating how to use the EB corbos Linux SDK. This folder contains the configuration of the example, and makes use of the QEMU images/qemu*.mk include makefiles.

# Kernel package to use
kernel: linux-image-generic

# Apt repositories to use
apt_repos:
  - apt_repo: http://archive.ubuntu.com/ubuntu
    distro: jammy
    components:
      - main
      - universe
  - apt_repo: http://archive.ubuntu.com/ubuntu
    distro: jammy-security
    components:
      - main
      - universe

# CPU architecture
arch: 'arm64'

The example makes use of the kernel “linux-image-generic”. This is a meta-package and always takes the latest available Ubuntu Jammy package. The Canonical Ubuntu apt repositories are used to build the examples.

Note that the only difference from the corresponding amd64 image is the arch specification in the last line. All other yaml files for the arm64 Jammy image are identical to those of the amd64 QEMU Jammy image, and are therefore already documented in the previous section.
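Because only the arch line differs, an arm64 variant of such a base file can be derived mechanically from the amd64 one. The following is a minimal sketch, assuming the amd64 file spells the key as arch: 'amd64'. The helper name set_arch and the two-line sample input are hypothetical.

```shell
#!/bin/sh
# Sketch: rewrite the arch line of a base.yaml read from stdin.
# Usage: set_arch ARCH < base.yaml
set_arch() {
    sed "s/^arch:.*/arch: '$1'/"
}

# Illustrative input, trimmed to two keys.
printf "kernel: linux-image-generic\narch: 'amd64'\n" | set_arch arm64
```

All lines that do not start with arch: pass through unchanged, which mirrors the statement that the remaining configuration is shared.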

The arm64 EB corbos Linux images

EB corbos Linux (EBcL) is an embedded Linux distribution targeting automotive and other industrial embedded Linux solutions. The main differences between EBcL and Ubuntu are the release and qualification handling, and some additional components added by EBcL which allow building more lightweight and better performing embedded images. The code is again very similar to the amd64 QEMU images.

The differences for aarch64 are the adaptation of the architecture in base.yaml and in the *.mk files.

Supported images

The following images are supported:

- aarch64 EB corbos Linux systemd image

- aarch64 EB corbos Linux crinit image

Their functionality and implementation are analogous to the corresponding amd64 images.

The EB corbos Linux for Safety Applications image

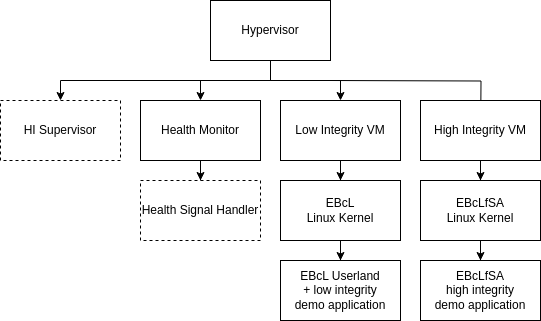

This image (located in images/arm64/qemu/ebclfsa) is an example for a typical EBcLfSA image. It generates an image with the EB corbos Hypervisor running two virtual machines.

One virtual machine (li-vm) is the low integrity VM. This VM is a standard EB corbos Linux using crinit and elos. It can be used just like any other EB corbos Linux image.

The second virtual machine (hi-vm) is the high integrity VM. In a production release, applications and the Linux kernel running in this VM are supervised by a component in the hypervisor (HI Supervisor). The supervisor is not part of the free download version. As long as nothing unexpected happens (e.g. misbehavior of the Linux kernel), it is unlikely that the presence of the supervisor can be noticed. The most noticeable impact of the supervisor is the limitation of allowed syscalls. This, however, is implemented in the Linux kernel itself in a way that prints a message to the console when a prohibited syscall is executed. Note that the list of allowed syscalls may change frequently during the ongoing development.

This high integrity partition only starts the initial high integrity application, there is no init process (like crinit or systemd) at the moment. In the future this is also subject to change.

Another component running in the hypervisor is the Health Monitor. This component implements a watchdog that is periodically triggered by the high integrity application. In a production environment there would also be a Health Signal Handler that can implement a reaction to missing health indication (e.g. toggle a GPIO pin).

For communication between the low and high integrity applications and between high integrity applications there are shared memory segments provided by the hypervisor. These shared memory segments are called “proxycom” (communication between high and low integrity applications) and “hicom” (communication between high integrity applications). The demo application provides an example of how to use these shared memory segments for communication.

For the included applications see: EBcLfSA Demo Application.

How to build and run

- Unpack the hypervisor archive you received into the folder results/packages

- Open a terminal in vscode (View -> Terminal -> New Terminal, select “workspace”)

- In the terminal enter

gen_sign_key && GNUPGHOME=/workspace/gpg-keys/.gnupg gen_app_apt_repo && serve_packages

(If this was already executed previously, it will ask whether the files Release.gpg and InRelease should be overwritten. This has to be confirmed with y.) This creates an apt repository and serves the packages on http://localhost:8000.

- Navigate to images/arm64/qemu/ebclfsa in the explorer view, right click on ebclfsa and select “Open in Integrated Terminal”

- In the new terminal run

make qemu

This will build the image and run it in qemu.

- Refer to the EBcLfSA Demo Application documentation for further information.

Buildprocess

Building the image for ebclfsa uses the same tools as other images and additionally the hypervisor configurator and the demo application. The main differences are:

- It builds two root file systems, one for the high and one for the low integrity virtual machine

- It configures the hypervisor to start the two virtual machines

- It builds and includes the EBcLfSA demo application

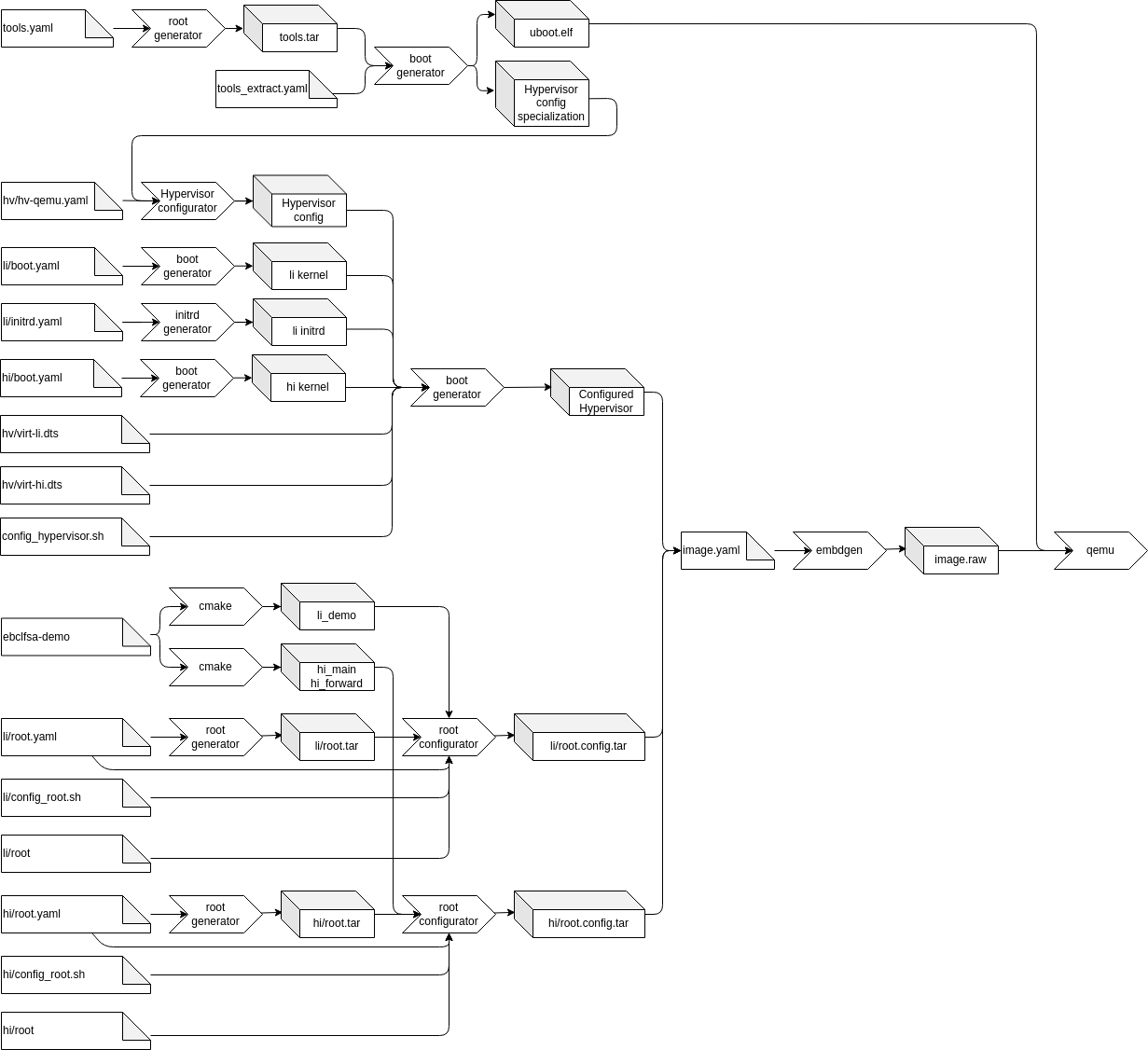

The whole process is depicted in the image below

This documentation will only describe the configuration of the hypervisor.

Hypervisor configuration

Step 1: Extract the hypervisor specialization

As described in the hypervisor config tool description, the tool allows specialization of the configuration model. In the first step a tools root filesystem is generated. The unconfigured hypervisor and the config specialization are installed in this filesystem. At the end of this step the configuration specialization and u-boot used to boot the image are extracted.

Step 2: Generate the hypervisor configuration

In this step the extracted specialization is used together with the configuration yaml file (hv/hv-qemu.yaml) to generate all configuration files.

In parallel to this generation, the Linux kernels for the high and low integrity VM are extracted and the initrd for the low integrity VM is generated.

Step 3: Build the final hypervisor

For this step several files are copied into the generated tools filesystem in order to generate the final hypervisor binary. These files are:

- The generated configuration

- The extracted kernels

- The initrd for the low integrity VM

- The device tree sources for the high and low integrity VM

These files are then processed by config_hypervisor.sh inside the tools root filesystem. First the two device trees are compiled using the Linux device tree compiler, then the configured hypervisor is generated.

At the end of this step the configured hypervisor is extracted from the tools filesystem, so it can be installed onto the boot partition by embdgen.
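To illustrate the device tree compilation in step 3, the sketch below prints a basic dtc call for each device tree source. It only shows the standard dtc options for turning a source file into a binary blob. The options actually used by config_hypervisor.sh are not documented here and may differ, and the file names hi-vm.dts and li-vm.dts as well as the helper dtc_command are hypothetical.

```shell
#!/bin/sh
# Sketch: print the dtc call that compiles one device tree source.
# The real config_hypervisor.sh may use other options or an additional
# preprocessing step; this only shows the basic dtc usage.
dtc_command() {
    src="$1"
    out="${src%.dts}.dtb"   # same base name, .dtb extension
    printf 'dtc -I dts -O dtb -o %s %s\n' "$out" "$src"
}

# Hypothetical file names for the two virtual machines.
for dts in hi-vm.dts li-vm.dts; do
    dtc_command "$dts"
done
```

The function prints the command instead of running it, so the sketch also works on a host without dtc installed.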

The Xen Hypervisor EB corbos Linux image

This image (located in images/amd64/qemu/xen) is an example utilizing the Xen Hypervisor (https://xenproject.org/). It generates a host image (Dom0, the initial privileged domain) with the Xen Hypervisor that starts and runs one guest (DomU, unprivileged domain) image.

How to build and run

- Navigate to images/amd64/qemu/xen in the explorer view, right click on xen and select “Open in Integrated Terminal”

- In the new terminal run

make qemu

This will build the image and run it in qemu.

- The console of the guest (DomU) can be accessed from the terminal using the command

xl console 1

(see the Xen management tool documentation at https://xenbits.xen.org/docs/4.16-testing/man/xl.1.html for further details).
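The domain ID 1 used above is the ID that the first started guest typically gets. If it is unclear which ID a guest has, it can be read from the output of xl list. The following sketch parses that output; the helper name domain_id, the guest name domu and the sample table are illustrative assumptions, not output captured from this image.

```shell
#!/bin/sh
# Sketch: read `xl list` output on stdin and print the ID of the
# domain whose name matches the first argument.
domain_id() {
    # Skip the header line, compare column 1 (Name), print column 2 (ID).
    awk -v name="$1" 'NR > 1 && $1 == name { print $2 }'
}

# Illustrative sample in the column layout printed by `xl list`;
# the guest name "domu" is a placeholder.
sample='Name                ID   Mem VCPUs      State   Time(s)
Domain-0             0  1024     2     r-----      12.3
domu                 1   512     1     -b----       4.5'

printf '%s\n' "$sample" | domain_id domu
```

On the running host image, the same function could be fed with the real output, for example xl list | domain_id followed by the guest name.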

Buildprocess

Building the Xen Hypervisor EB corbos Linux image uses the same tools as other images. The main differences are:

- It builds two images: the host image and the guest image.

- The guest image is included in the host image (located in /opt/images/domu.img)

- GRUB is configured to the EFI partition after the image build.

EB corbos Linux example images for the Raspberry Pi 4

EB corbos Linux comes with development support for the Raspberry Pi 4. This means you can use a Raspberry Pi 4 board for early development and demos, and you get support, but it’s not qualified for mass production. The Raspberry Pi 4 example images make use of the kernel and firmware packages provided by Ubuntu Ports.

# Kernel package to use
kernel: linux-image-raspi

use_ebcl_apt: true

# Additional apt repos
apt_repos:
  # Get Ubuntu Raspberry Pi packages
  - apt_repo: http://ports.ubuntu.com/ubuntu-ports
    distro: jammy
    components:
      - main
      - universe
      - multiverse
      - restricted
  # Get latest security fixes
  - apt_repo: http://ports.ubuntu.com/ubuntu-ports
    distro: jammy-security
    components:
      - main
      - universe
      - multiverse
      - restricted

# CPU architecture
arch: arm64

For booting, the Raspberry Pi expects a FAT32 partition as the first partition on the SD card. This partition must contain the firmware and kernel binaries, the device trees, and some configuration files. For this image, we make use of the split archive feature of embdgen. This feature allows distributing the content of one tarball to multiple partitions. The following image.yaml takes the content of build/ebcl_pi4.config.tar, puts the content of the /boot folder on the boot partition, and puts the remaining content on the root partition.

# Partition layout of the image
# For more details see https://elektrobit.github.io/embdgen/index.html
contents:
  - name: archive
    type: split_archive
    archive: build/ebcl_pi4.config.tar
    splits:
      - name: boot
        root: boot
    remaining: root

image:
  type: mbr
  boot_partition: boot

  parts:
    - name: boot
      type: partition
      fstype: fat32
      size: 200 MB
      content:
        type: fat32
        content: archive.boot

    - name: root
      type: partition
      fstype: ext4
      size: 4 GB
      content:
        type: ext4
        content: archive.root
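The effect of the split can be reproduced by hand with plain tar, which may help when checking what ends up on which partition. The sketch below is only an illustration of the idea: embdgen performs the split internally and does not use these commands. The helper name split_archive and the demo file names are hypothetical.

```shell
#!/bin/sh
# Sketch of the split idea using plain tar; embdgen does this itself.
# Usage: split_archive TARBALL BOOT_DIR ROOT_DIR
split_archive() {
    tarball="$1"; boot_dir="$2"; root_dir="$3"
    mkdir -p "$boot_dir" "$root_dir"
    # Unpack everything into the root directory first ...
    tar -xf "$tarball" -C "$root_dir"
    # ... then move the content of boot/ to the boot directory.
    # (Hidden files are not matched by the glob in this sketch.)
    if [ -d "$root_dir/boot" ]; then
        for f in "$root_dir"/boot/*; do
            if [ -e "$f" ]; then mv "$f" "$boot_dir/"; fi
        done
    fi
}

# Build a tiny demo tarball with one boot file and one root file.
work="$(mktemp -d)"
mkdir -p "$work/src/boot" "$work/src/etc"
echo kernel > "$work/src/boot/kernel8.img"
echo ebcl-pi4 > "$work/src/etc/hostname"
tar -cf "$work/demo.tar" -C "$work/src" boot etc

split_archive "$work/demo.tar" "$work/boot_part" "$work/root_part"
ls "$work/boot_part" "$work/root_part"
```

After the call, the boot_part directory corresponds to archive.boot and the root_part directory to archive.root in the image.yaml above.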

The cmdline.txt and config.txt are just taken from a prebuilt Raspberry Pi OS image.

#!/bin/sh

# Create a hostname file
echo "ebcl-pi4" > ./etc/hostname

# Create /etc/hosts
cat > ./etc/hosts <<- EOF
127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
EOF

# Copy Raspi device trees
cp ./usr/lib/firmware/5.15.0-1060-raspi/device-tree/broadcom/bcm2711*.dtb ./boot/

# Copy device tree overlays
cp -R ./usr/lib/firmware/5.15.0-1060-raspi/device-tree/overlays ./boot/

# Copy raspi firmware
cp ./usr/lib/linux-firmware-raspi/* ./boot/

# Copy kernel as the expected name
cp ./boot/vmlinuz-* ./boot/kernel8.img || true

# Copy initrd as the expected name
cp ./boot/initrd.img-* ./boot/initramfs8 || true

# Delete the symlinks
rm ./boot/vmlinuz || true
rm ./boot/initrd.img || true

The shared config_root.sh creates a hostname and hosts file, and makes sure the kernel, bootloader and device trees are available at the expected location and name.
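The script above names the firmware folder with a fixed kernel version (5.15.0-1060-raspi), so the path would likely need adjusting when a different raspi kernel package is installed. As a possible variation, and not the approach used by the SDK, the folder could be located with a glob. The helper name find_raspi_firmware_dir is hypothetical.

```shell
#!/bin/sh
# Sketch: locate the raspi firmware directory without naming the
# kernel version. Prints the last match in the shell's glob order,
# which is a plain string order and not a true version sort.
find_raspi_firmware_dir() {
    root="$1"
    found=""
    for d in "$root"/usr/lib/firmware/*-raspi; do
        if [ -d "$d" ]; then found="$d"; fi
    done
    if [ -n "$found" ]; then
        printf '%s\n' "$found"
    else
        return 1    # no matching directory below ROOT
    fi
}

# Demo on a scratch tree that mimics the layout used above.
demo_root="$(mktemp -d)"
mkdir -p "$demo_root/usr/lib/firmware/5.15.0-1060-raspi/device-tree/broadcom"
fw_dir="$(find_raspi_firmware_dir "$demo_root")"
echo "$fw_dir/device-tree/broadcom"
```

In a root filesystem with exactly one installed raspi kernel, which is the expected case for these images, the string order of the glob does not matter.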

EBcL Raspberry Pi 4 systemd image

The folder images/arm64/raspberry/pi4/systemd contains the systemd variant of the Raspberry Pi 4 image. This image is not a minimal one, but brings what you expect to find in a Raspberry Pi server image. Since we use the split archive feature, we also install the kernel and bootloader packages into the root filesystem. This is a bit simpler, and we don’t need to take care of the required kernel modules, but it also results in a somewhat more bloated and less secure root filesystem.

base: ../base.yaml

name: ebcl_pi4

packages:
  - linux-firmware-raspi
  - linux-raspi
  - u-boot-rpi
  - flash-kernel
  - systemd
  - systemd-coredump
  - systemd-timesyncd
  - udev
  - util-linux
  - netbase
  - locales
  - file
  - findutils
  - kmod
  - iproute2
  - iptables
  - iputils-ping
  - vim
  - nano
  - strace
  - apt
  - openssh-server
  - openssh-client

# Scripts to configure the root tarball
scripts:
  - name: ../config_root.sh # Name of the script, relative path to this file
    env: fake
  - name: config_systemd.sh # Name of the script, relative path to this file
    env: chroot

host_files:

- source: ../cmdline.txt